Squaller (2024-curr.)

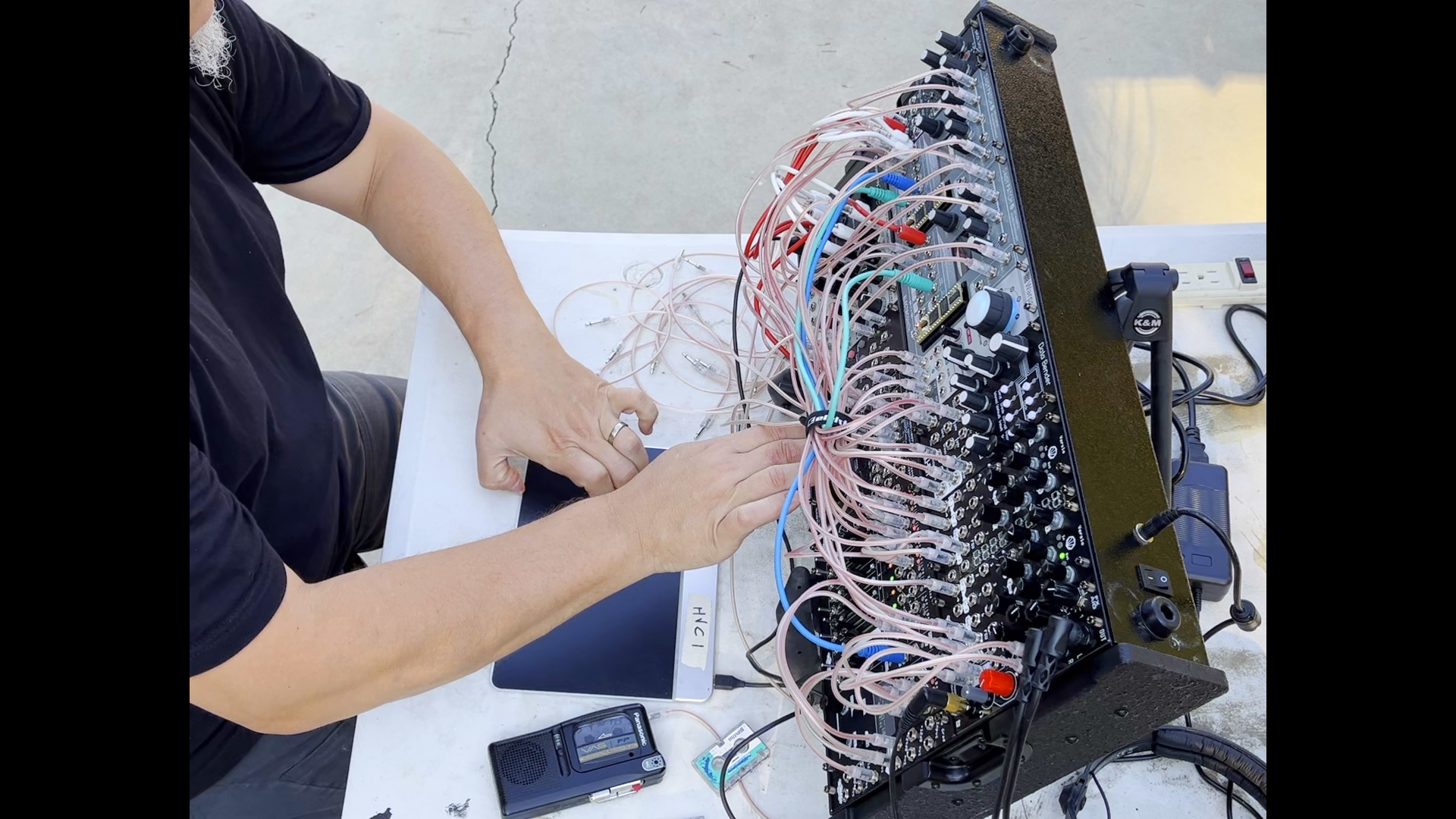

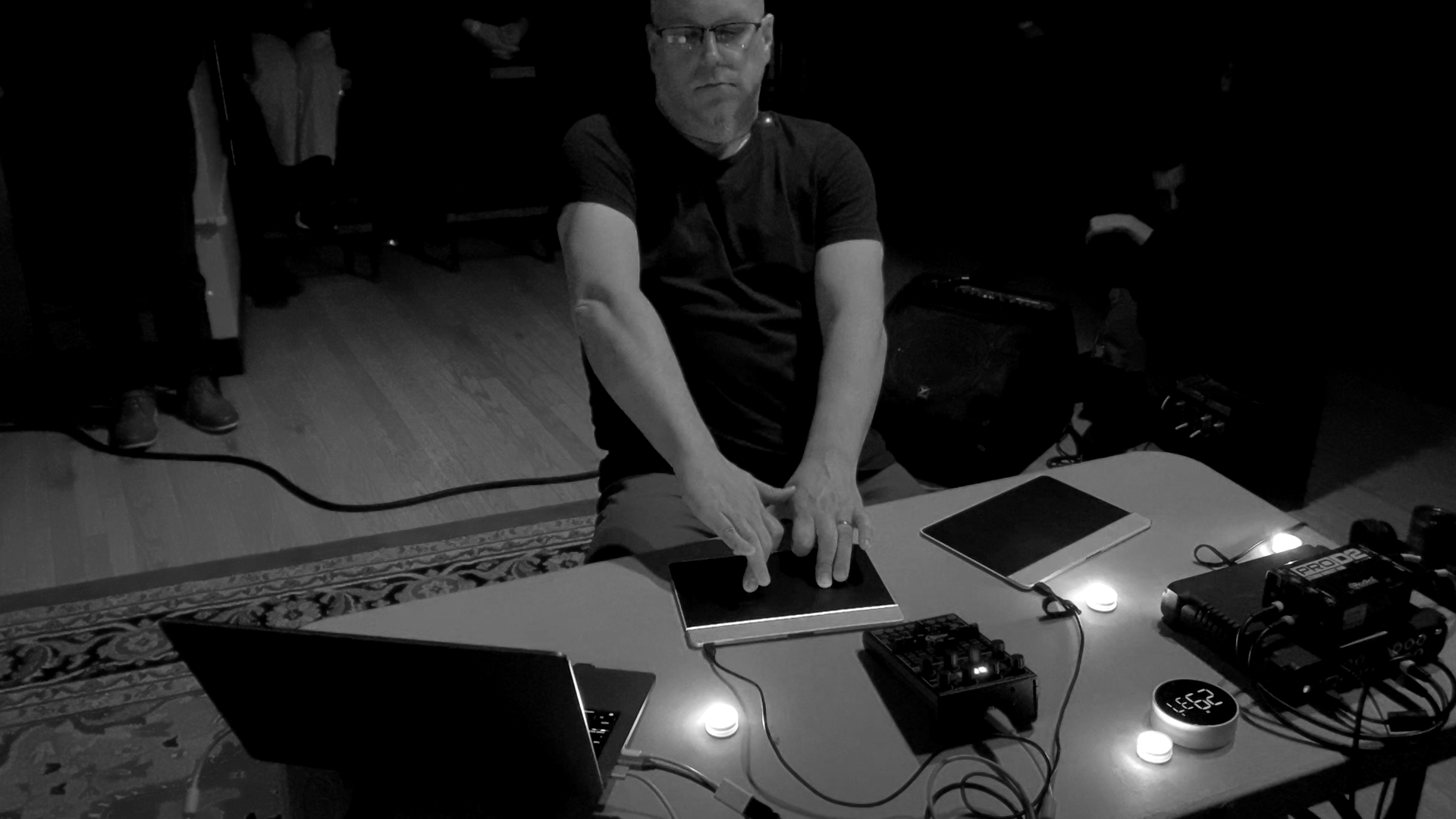

Squaller is an improvisational performance system built around a modular synthesizer featuring several digital modules with custom firmware made with the Electrosmith Daisy embedded computing platform and the Cycling ’74 Gen programming environment. Custom-programmed modules include erratic synthesis voices, audio and CV loopers, and idiosyncratic granulators, paired with gestural controllers that include a high-resolution multitouch surface for control voltage. Experimental in sound design, Squaller harnesses chaotic oscillators, nonlinear feedback loops, unstable delays, and cross-modulation to generate raw, unpredictable sonic textures. It produces an expressive range of timbres, from guttural howls to fierce harmonics, fusing structured synthesis with organic unpredictability.

The Squaller system was initiated in March 2024 and has evolved over several months, expanding to two cases totaling 188 HP with a mix of digital and analog modules. The documentation here was recorded in July 2024 in a live performance setting while the work was still in development.

A primary goal of this project is to explore the affordances of digital modular synthesis and the new possibilities that current embedded-computing workflows offer for creating custom firmware. Squaller is intended to keep evolving as new versions of its custom firmware are written.

Sluicer (2023-curr.)

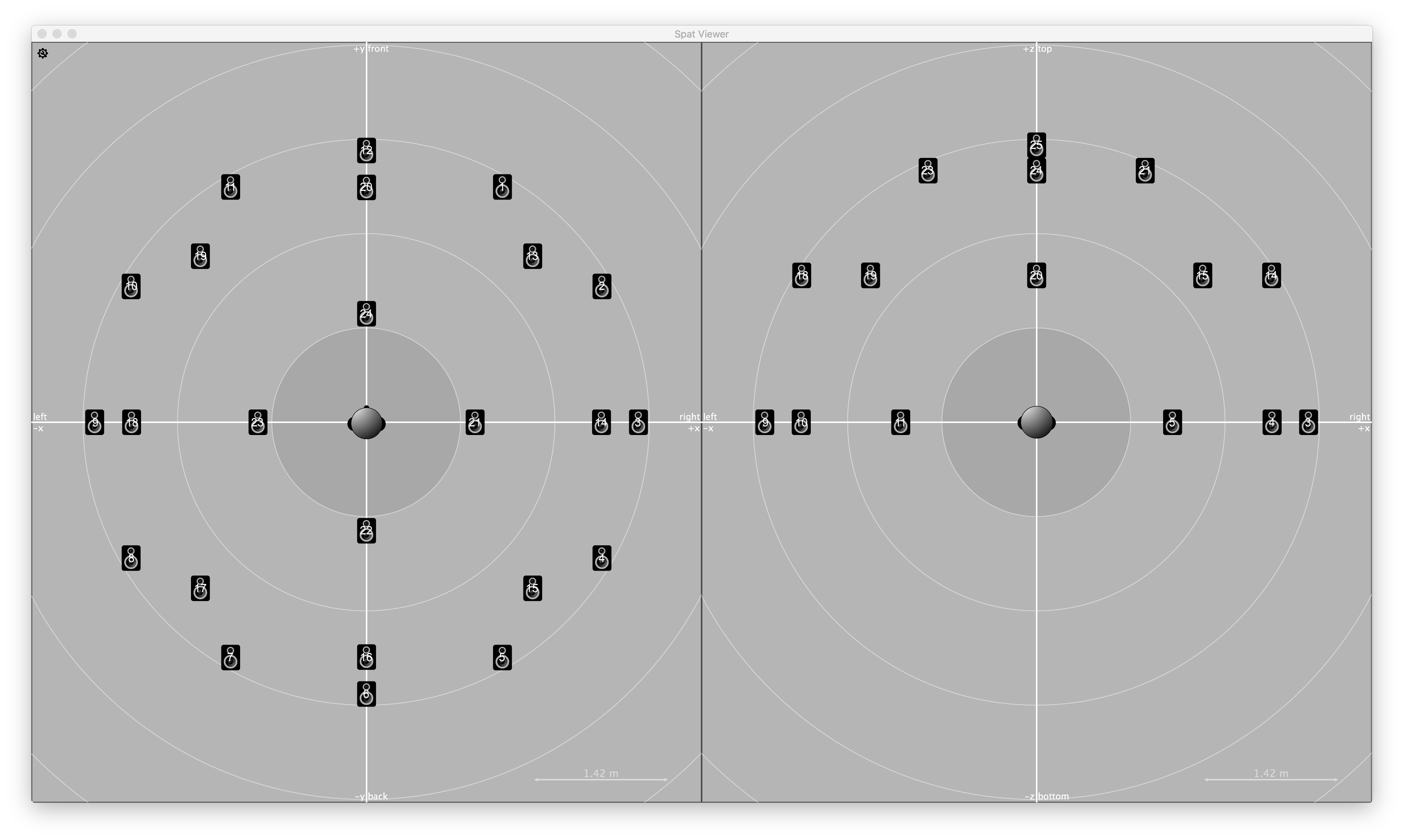

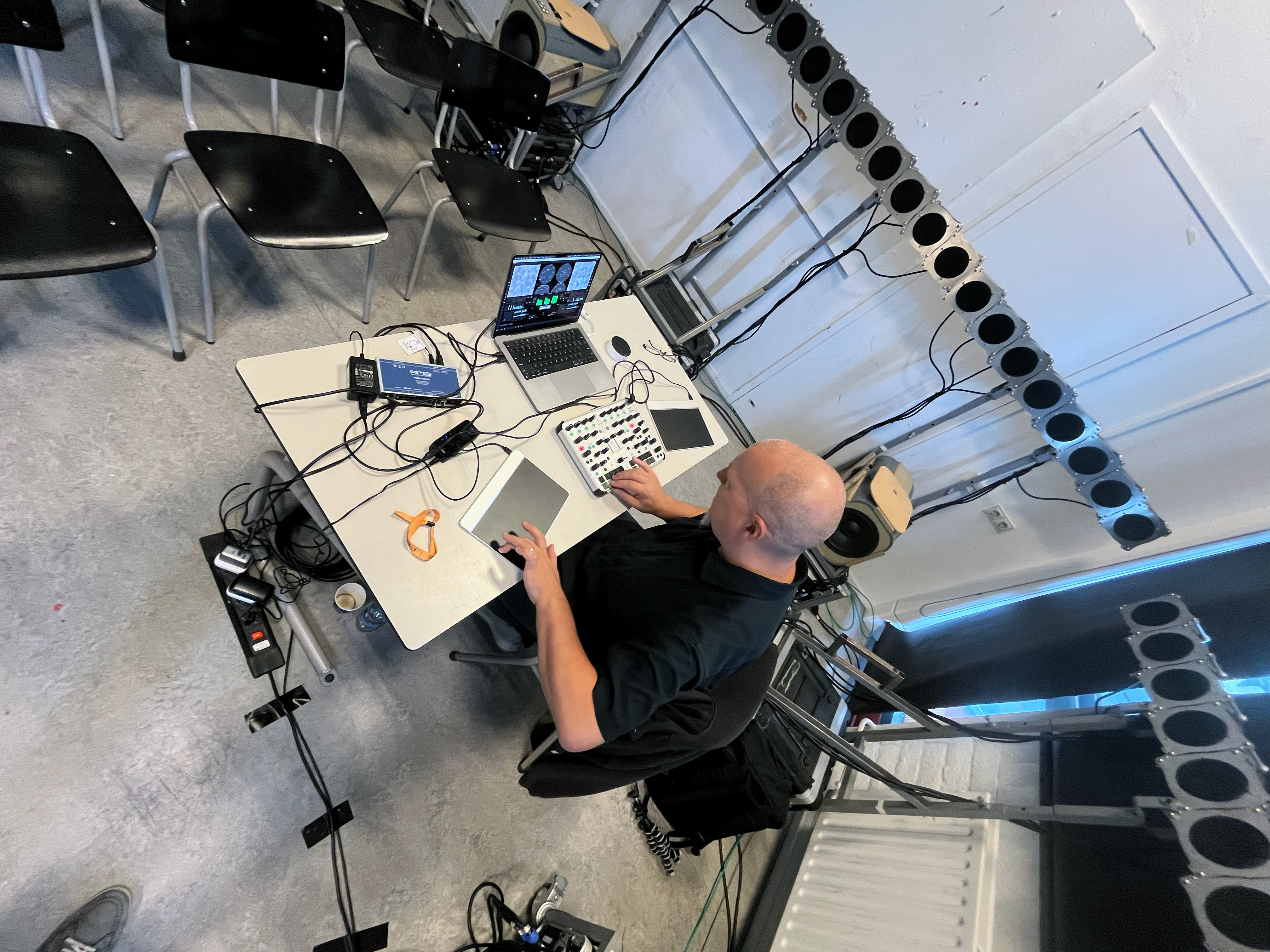

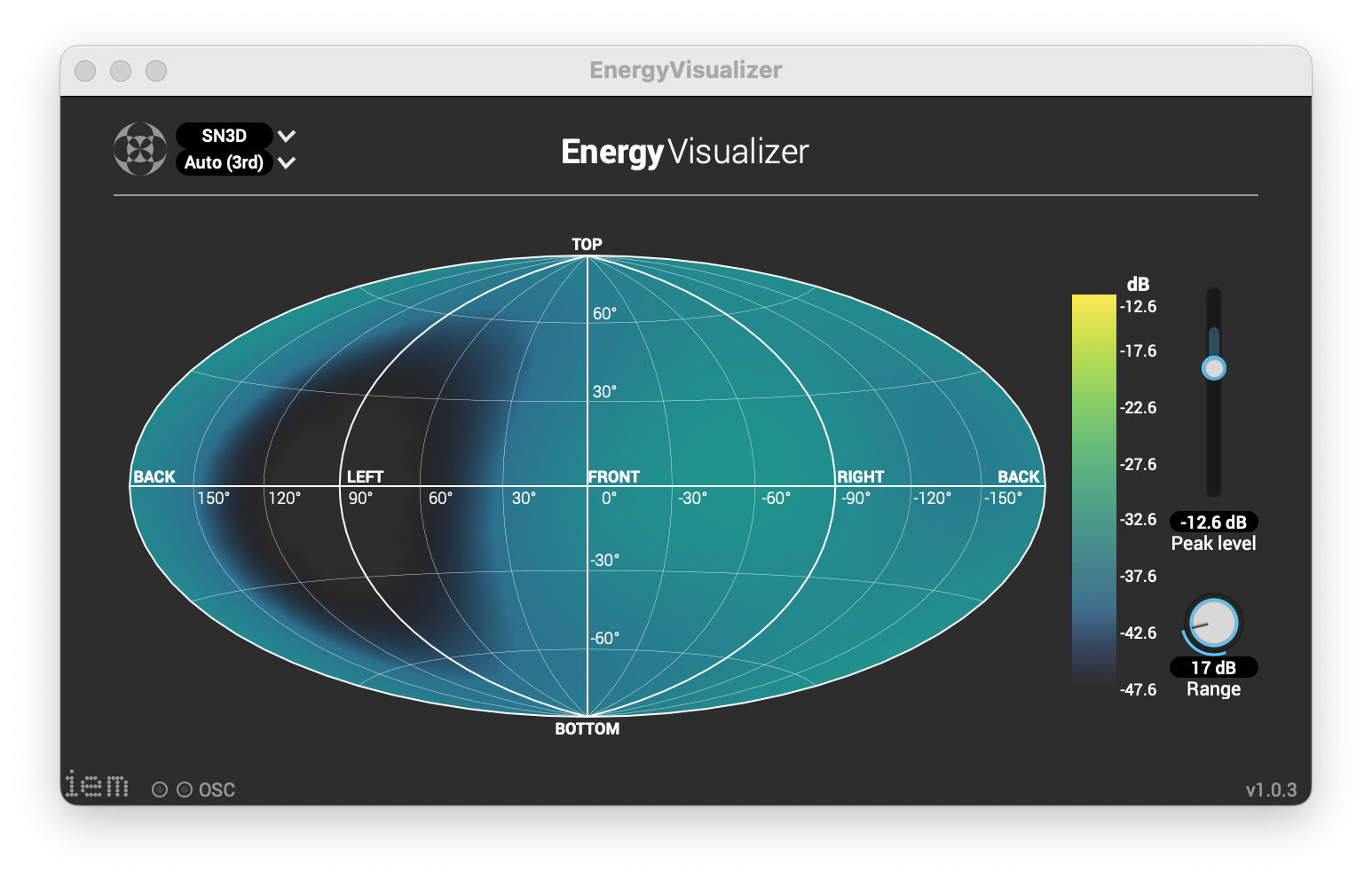

Sluicer is a performance system for spatial audio improvisation, adaptable to output channel configurations ranging from stereo to high-density loudspeaker arrays. In this work, two 20-voice erratic synthesizers operate as a roving “chorus” under the player’s direction. Both synths are processed by a series of multichannel effects designed specifically for high order ambisonic signals, allowing the player to create and alter spatial dimensions. As audio flows, the guiding action is like opening and closing the gates of a lock on a waterway. The results are timbral and spatial churns, swells, floods and drains, motion in repetition, expansion, and contraction. Sluicer is programmed in Max; its tactile interfaces are high-resolution multitouch control surfaces and a DJ-style MIDI controller.

Among other performances, Sluicer was featured at Virginia Tech’s Cube Fest 2024 on 140 loudspeaker channels; at the New Interfaces for Musical Expression 2024 conference at HKU in Utrecht, Netherlands, in a version prepared for the 160 loudspeaker channels of the Game of Life Wave Field Synthesis speaker array; at the Residual Noise fest 2025 at RISD/Brown University on the IKO 3D loudspeaker; and at the International Computer Music Conference 2025 at Northeastern University on a 15.1 system.

SFFX (2022-curr.)

Work in progress

Talk from March 12, 2025 that summarizes recent advances in this work:

Background:

Since 2015, my artistic work and research have focused primarily on an area of spatial audio involving High Density Loudspeaker Arrays (HDLA), which are typically permanent installations with 24 or more loudspeakers in a cube or hemispherical configuration. Some HDLA facilities feature arrays with hundreds of loudspeakers to provide greater resolution and precision and to support a wider range of spatial audio techniques. For this work, I have traveled to various HDLA facilities to participate in residencies and workshops and to perform and present at conferences and festivals. In 2018, I founded the Studio for Research in Sound and Technology (SRST) at RISD, which houses a 25.4-channel loudspeaker array. I serve as Faculty Lead for SRST, overseeing its staff, curriculum delivery, research advancement, and public engagement.

A prevalent technology for creating immersive audio experiences in HDLA facilities, SRST included, is High Order Ambisonics (HOA).
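For readers new to HOA, two basic facts are worth making concrete: a full-sphere ambisonic signal of order N has (N + 1)² channels (so the third-order and fifth-order works described on this page use 16 and 36 channels respectively), and a mono source is encoded by weighting it with direction-dependent gains. The minimal sketch below assumes the common AmbiX convention (ACN channel order, SN3D normalization); the function names are hypothetical and this is not code from FOAFX or SFFX.

```python
import math


def hoa_channel_count(order: int) -> int:
    """Channels in a full-sphere ambisonic signal of the given order: (N + 1)^2."""
    return (order + 1) ** 2


def encode_foa(sample: float, azimuth_deg: float, elevation_deg: float) -> list:
    """Encode one mono sample into first-order ambisonics.

    Assumes the AmbiX convention: ACN channel order (W, Y, Z, X) and SN3D
    normalization. Azimuth is counterclockwise from the front (+90 = left);
    elevation is positive upward.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    x = sample * math.cos(az) * math.cos(el)
    return [w, y, z, x]


for order in (1, 3, 5):
    print(f"order {order}: {hoa_channel_count(order)} channels")
```

Higher orders add more spherical-harmonic channels, which is what lets larger loudspeaker arrays reproduce finer spatial detail.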

In 2021-22, I was the Project Director for an NEA grant-funded software development project that served as a proof of concept for my new work in spatial audio. An outcome of this work is FOAFX, a command line tool for applying spatially positioned audio effects to first order ambisonic sound files; it is currently available as a Node.js program, including source code. With this project complete and a wealth of preliminary research accomplished, I am now building on this work to create SFFX, a new, multiyear software development project with the goal of producing an open package for 3d audio effect processing intended for sound designers and composers working with High Order Ambisonics (HOA). Because SFFX employs HOA, its outcomes will be scalable to a variety of loudspeaker systems, as well as to stereo headphones through binaural rendering.

SFFX aims to solve a problem encountered in audio workflows involving ambisonics: several audio effect processes (e.g., compression, distortion, noise cancellation) cannot be applied to encoded 3d audio without ruining the spatial dimensions of the source file or stream. While some workarounds are known within the 3d audio community, they involve combining several tools with complex signal routing, which can be puzzling and deters most users. The goal is to apply an effect to the entire sound field, or to a region within it, without entirely losing its 360° fidelity. An exciting affordance of the SFFX approach is that a spatially positioned audio effect can be automated to move through the sound field, producing a dynamic spatial wet/dry mix that can be a wellspring for creativity. As a practical example, much like a spotlight tracking a performer on a stage, SFFX could be used to focus on a moving sound source and increase its gain while suppressing background noise.
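The spotlight example suggests one way a spatially positioned effect could work: decode the sound field to a set of virtual loudspeaker directions, process each channel, blend wet and dry per channel according to how close that direction is to the effect's focus, then re-encode. The sketch below covers only the per-direction blending step. The decode/re-encode stages are omitted, and the raised-cosine window and the names `wet_mix` and `apply_spotlight` are assumptions for illustration, not the actual SFFX design. Moving `focus` over time would give an automated, moving wet/dry mix.

```python
import math

Vec = tuple  # (x, y, z) unit vector


def unit_vector(azimuth_deg: float, elevation_deg: float) -> Vec:
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(az) * math.cos(el), math.sin(az) * math.cos(el), math.sin(el))


def angular_distance(a: Vec, b: Vec) -> float:
    """Great-circle angle (radians) between two unit vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))


def wet_mix(direction: Vec, focus: Vec, width_deg: float) -> float:
    """Hypothetical raised-cosine spotlight: 1.0 at the focus, 0.0 at width_deg away."""
    d = angular_distance(direction, focus)
    w = math.radians(width_deg)
    if d >= w:
        return 0.0
    return 0.5 * (1.0 + math.cos(math.pi * d / w))


def apply_spotlight(channels, directions, focus, width_deg, effect):
    """Blend each (already decoded) virtual-loudspeaker channel between dry and effect(sample)."""
    out = []
    for samples, direction in zip(channels, directions):
        m = wet_mix(direction, focus, width_deg)
        out.append([(1.0 - m) * s + m * effect(s) for s in samples])
    return out


def clip(s: float) -> float:
    """Stand-in effect: a hard clipper at +/-0.5."""
    return max(-0.5, min(0.5, s))


# Six virtual loudspeakers on an octahedron, spotlight aimed straight ahead.
OCTA_DIRS = [unit_vector(a, e) for a, e in
             [(0, 0), (180, 0), (90, 0), (-90, 0), (0, 90), (0, -90)]]
result = apply_spotlight([[1.0]] * 6, OCTA_DIRS, unit_vector(0, 0), 90.0, clip)
```

Here only the front channel is clipped; the others pass through dry because they lie 90° or more from the focus.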

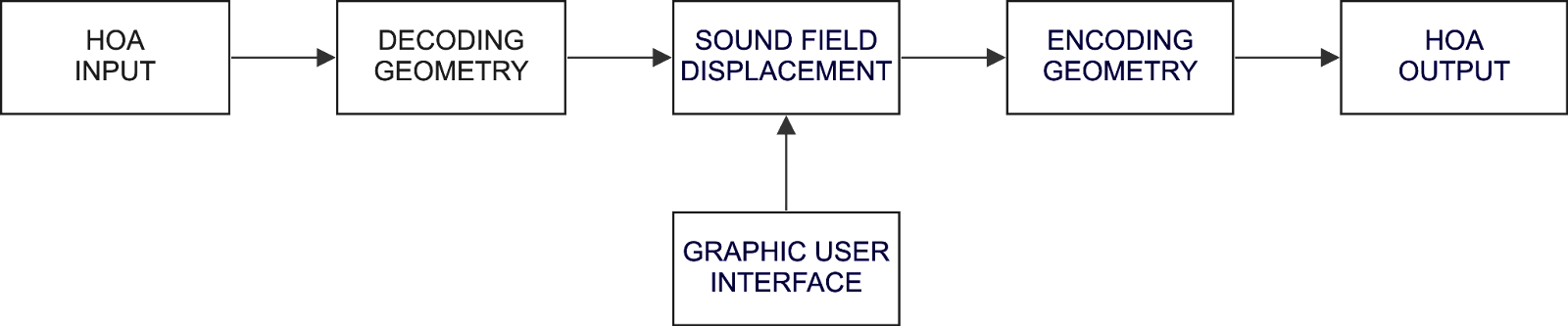

Further, SFFX will offer several unconventional methods for altering 3d audio through a process I have termed Sound Field Displacement (SFD).

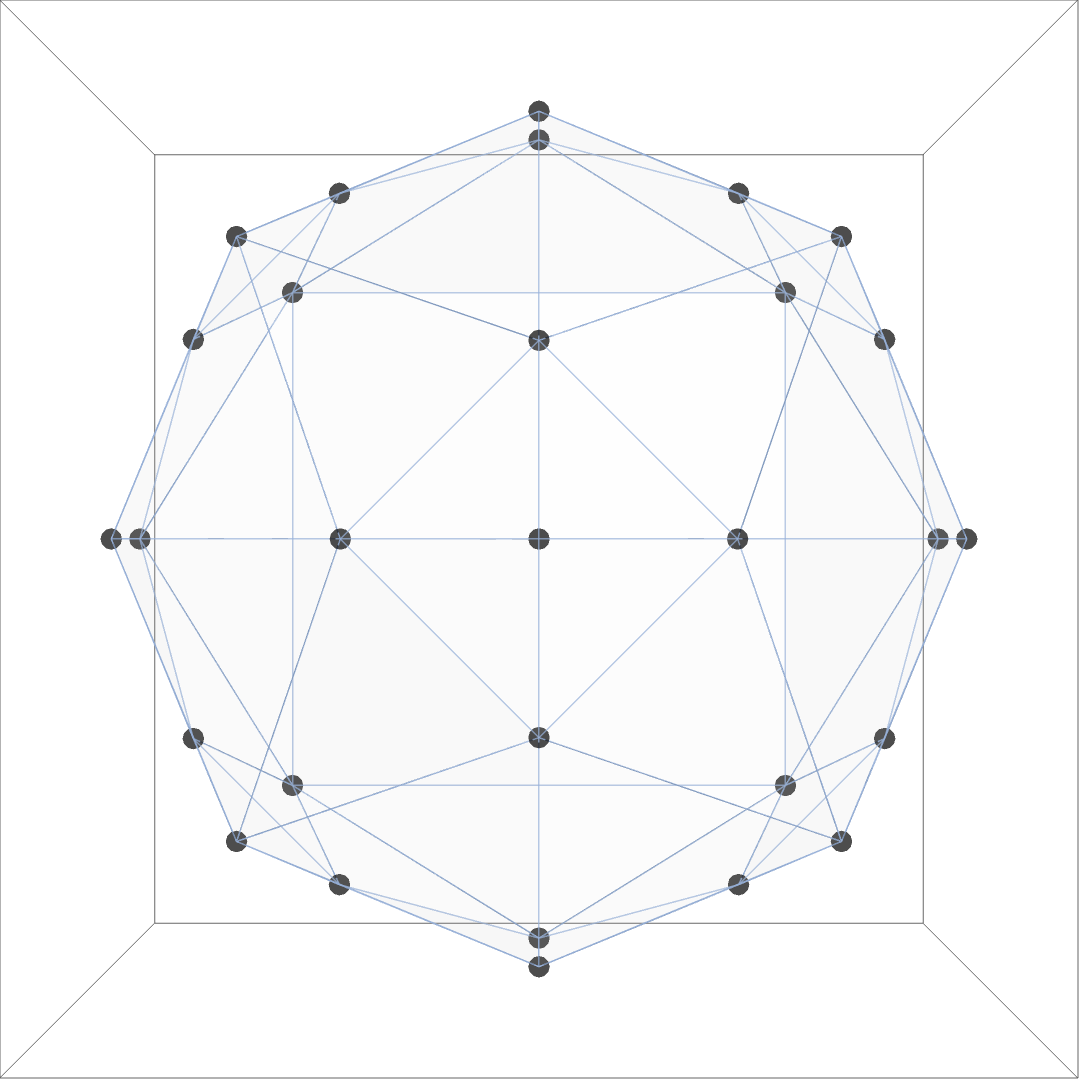

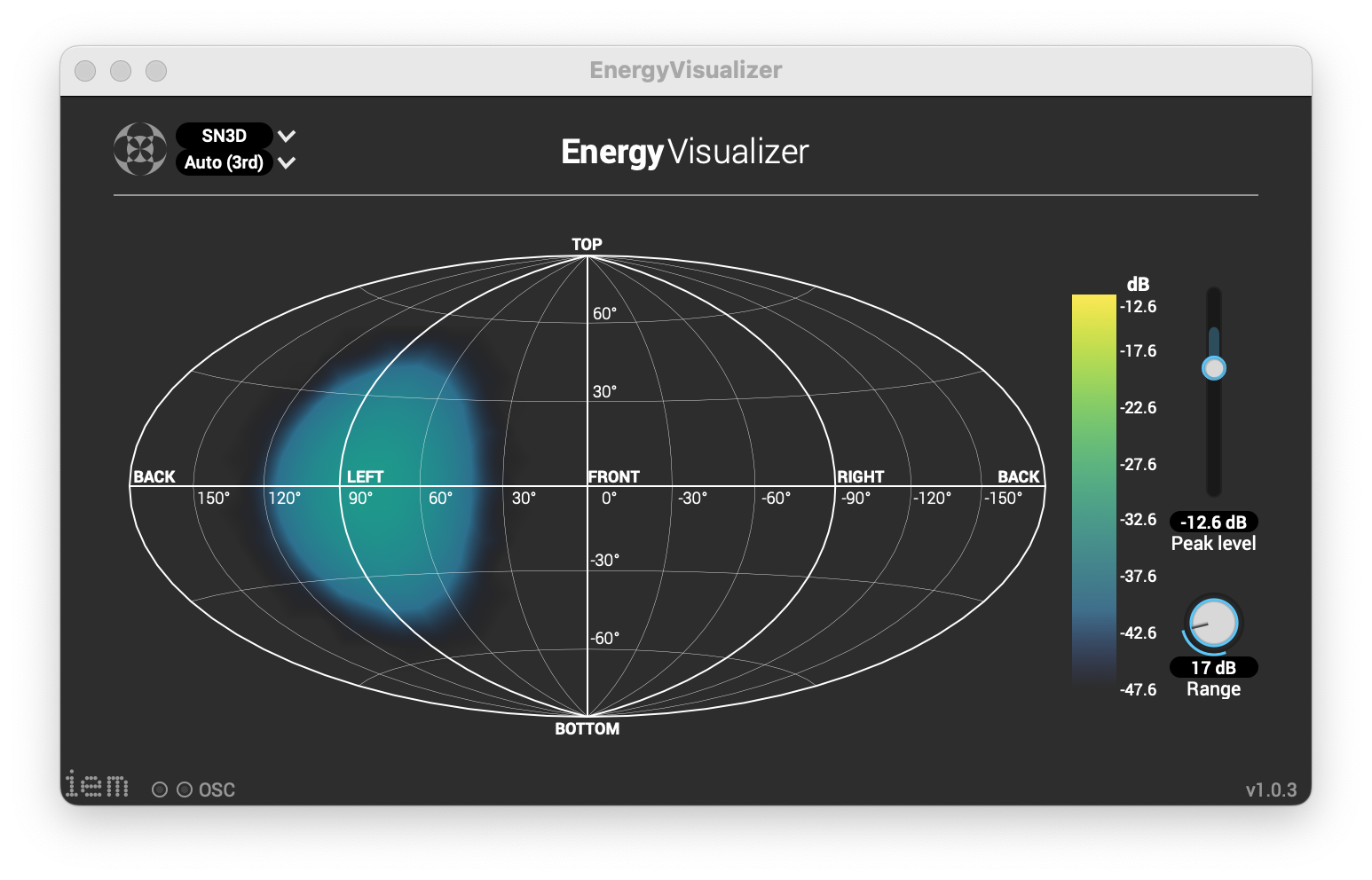

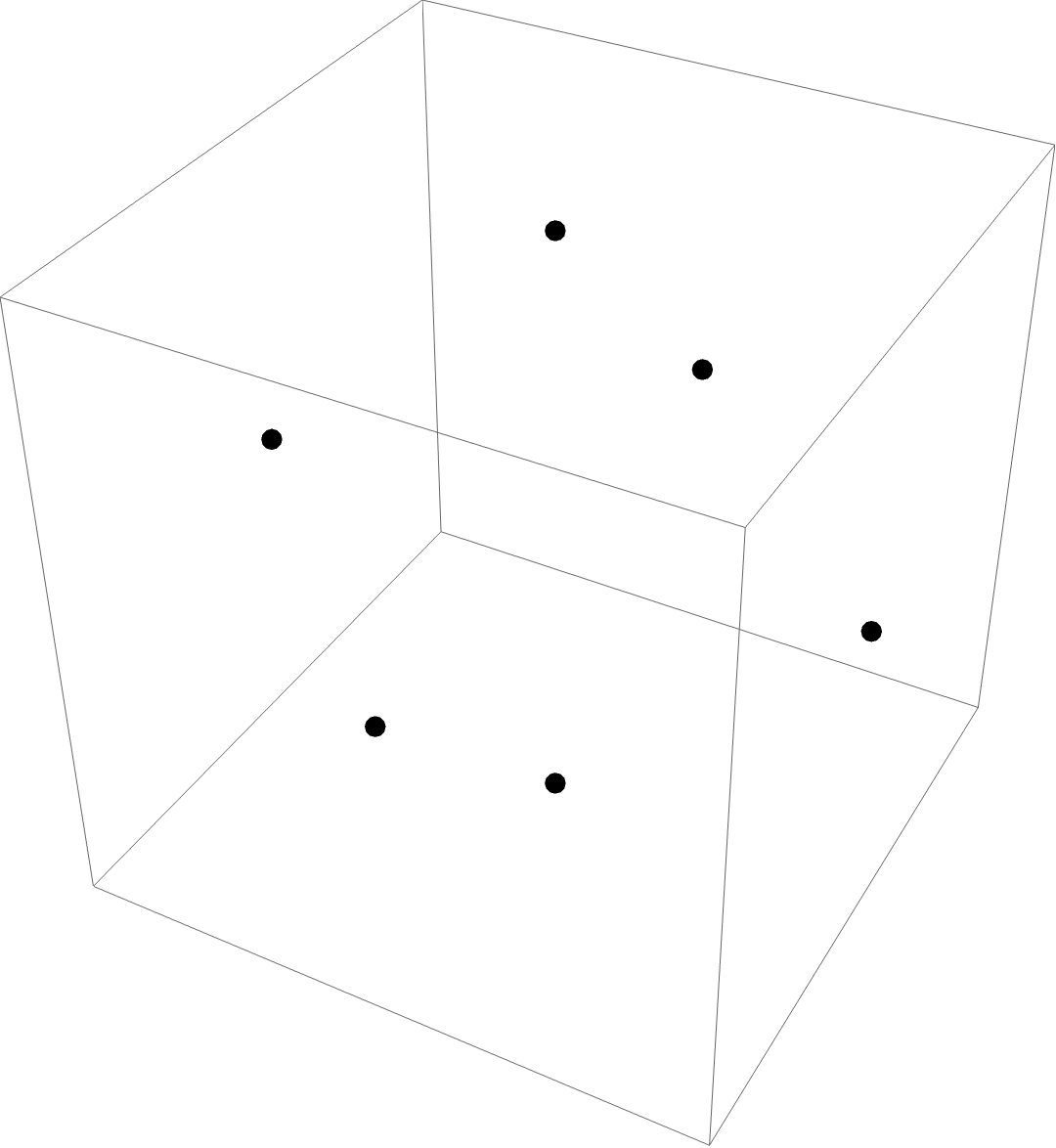

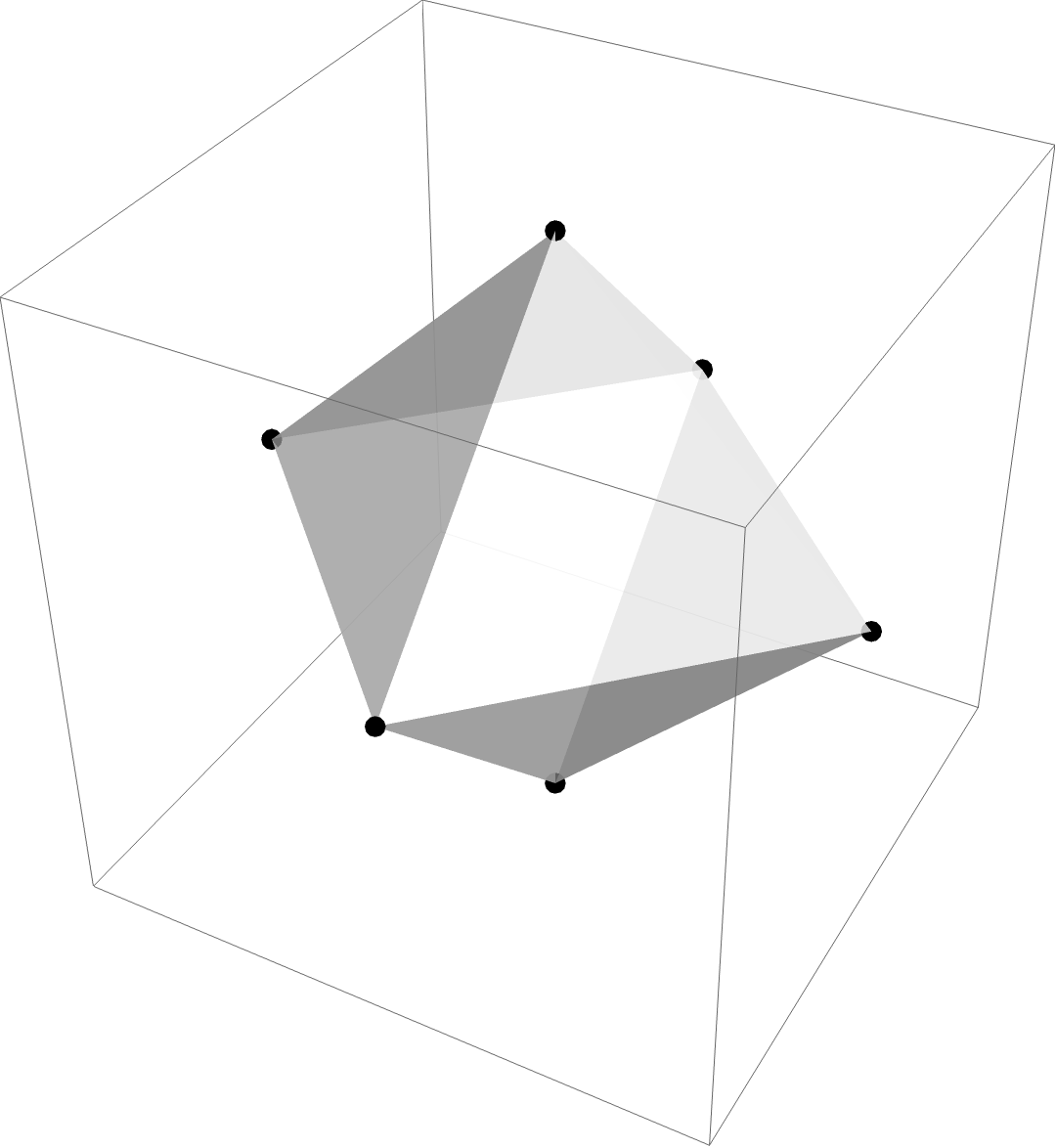

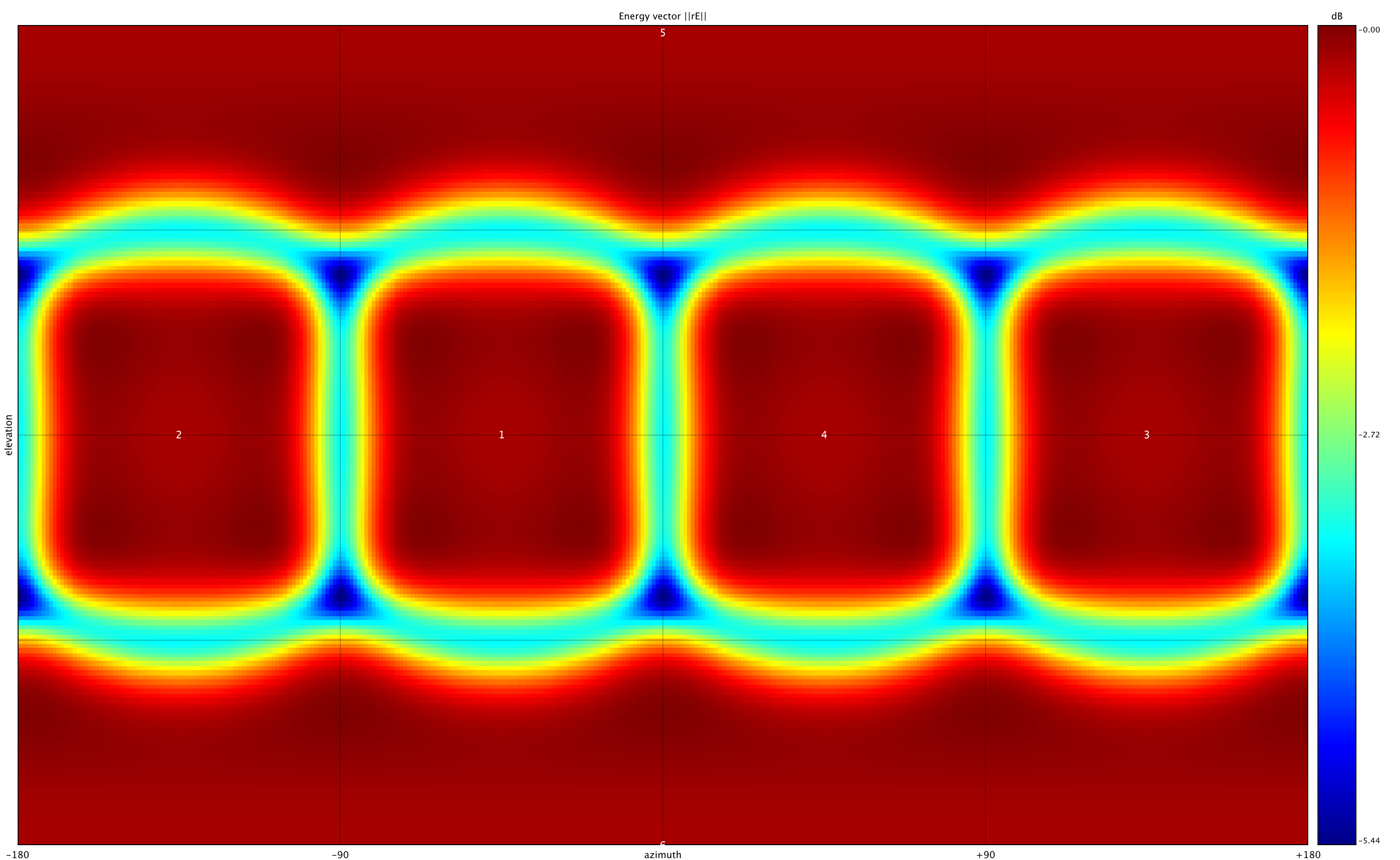

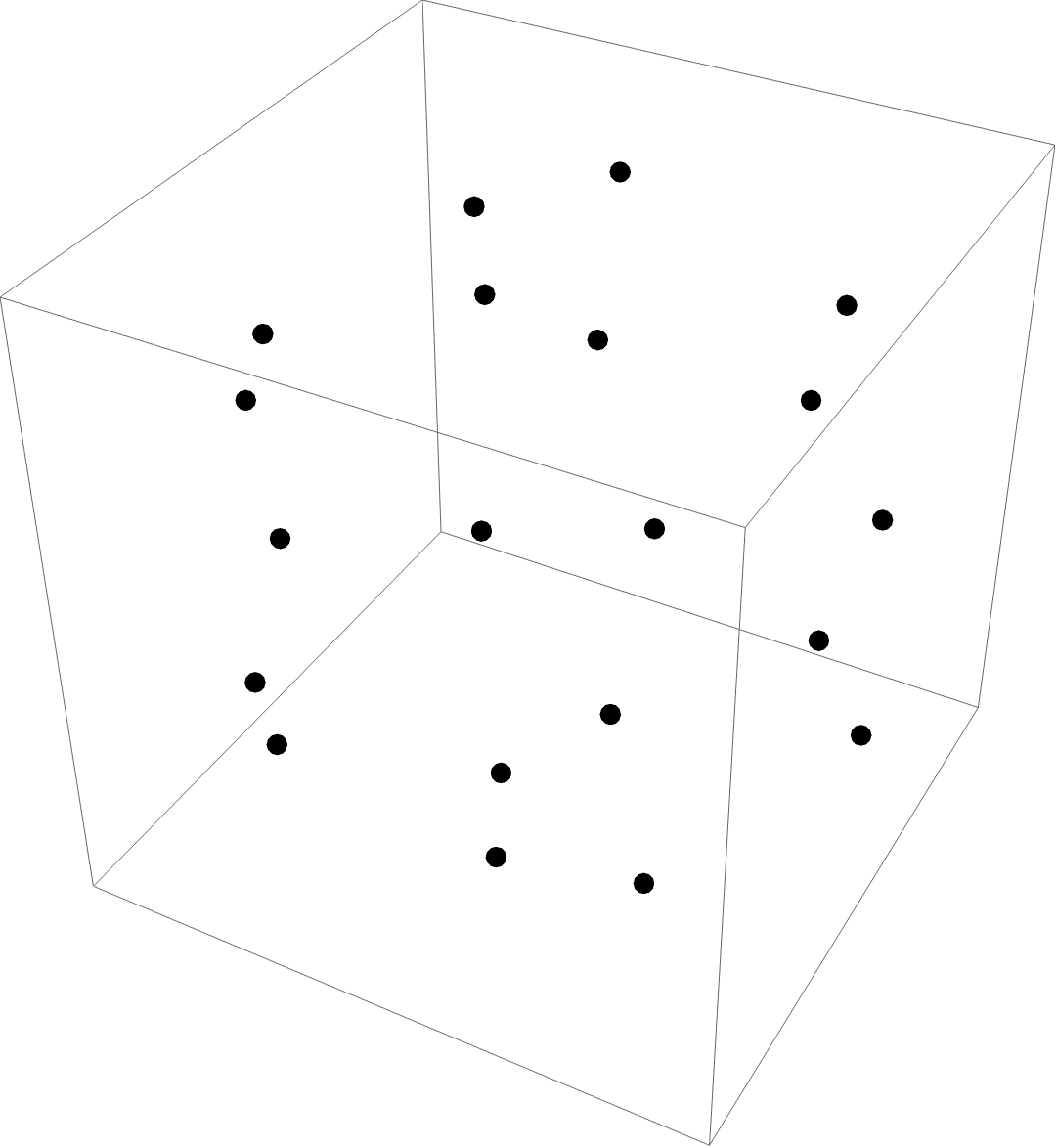

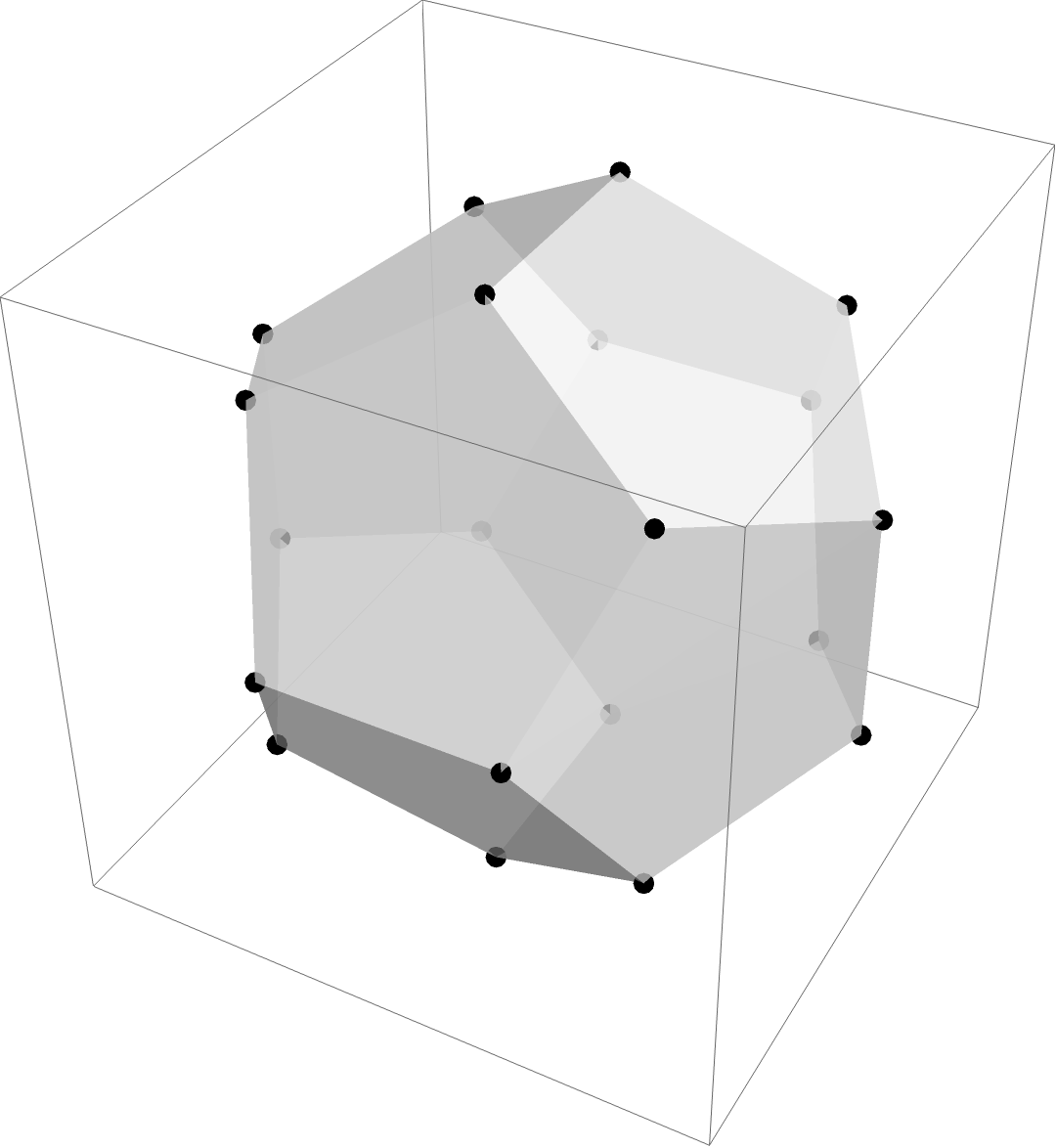

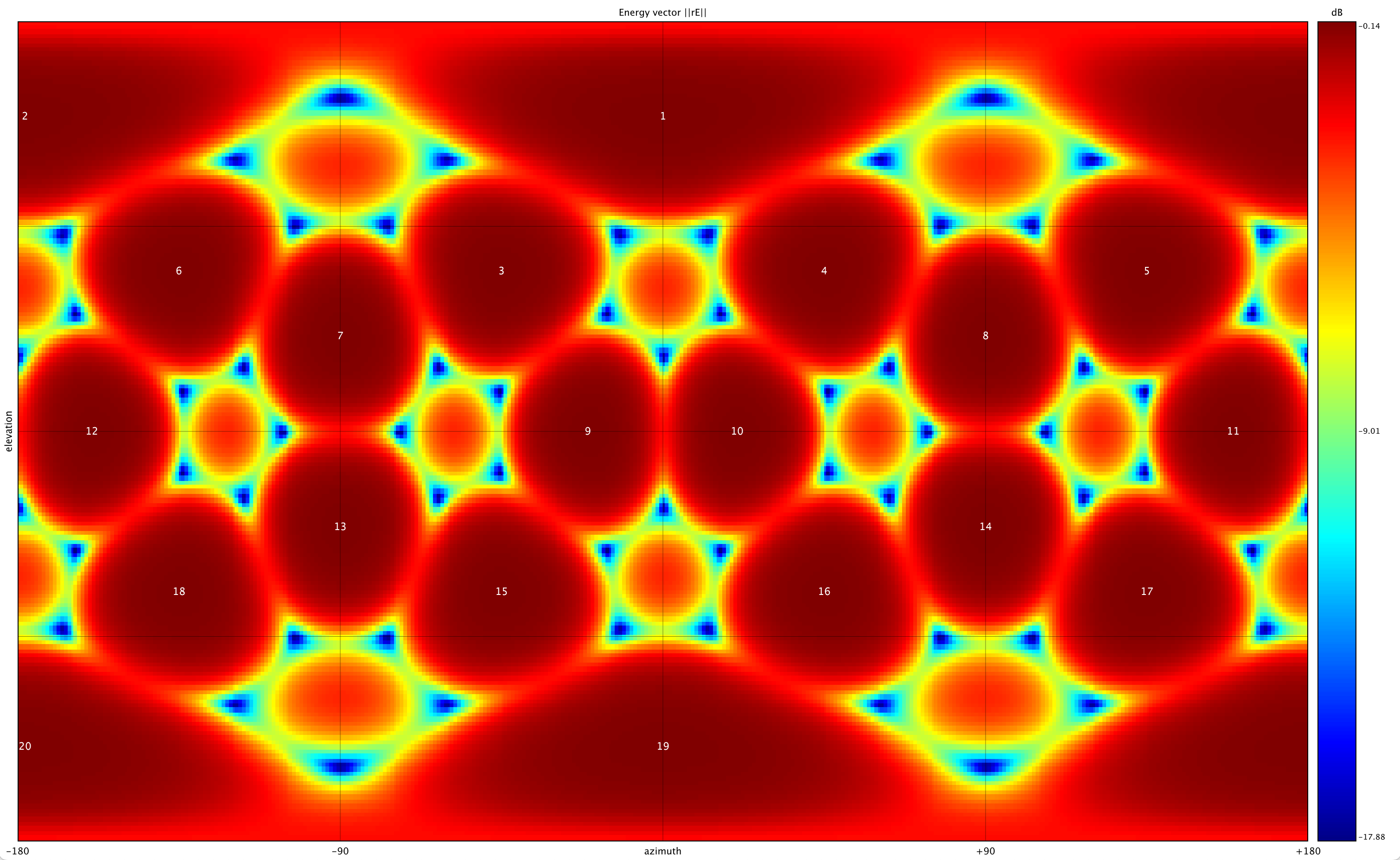

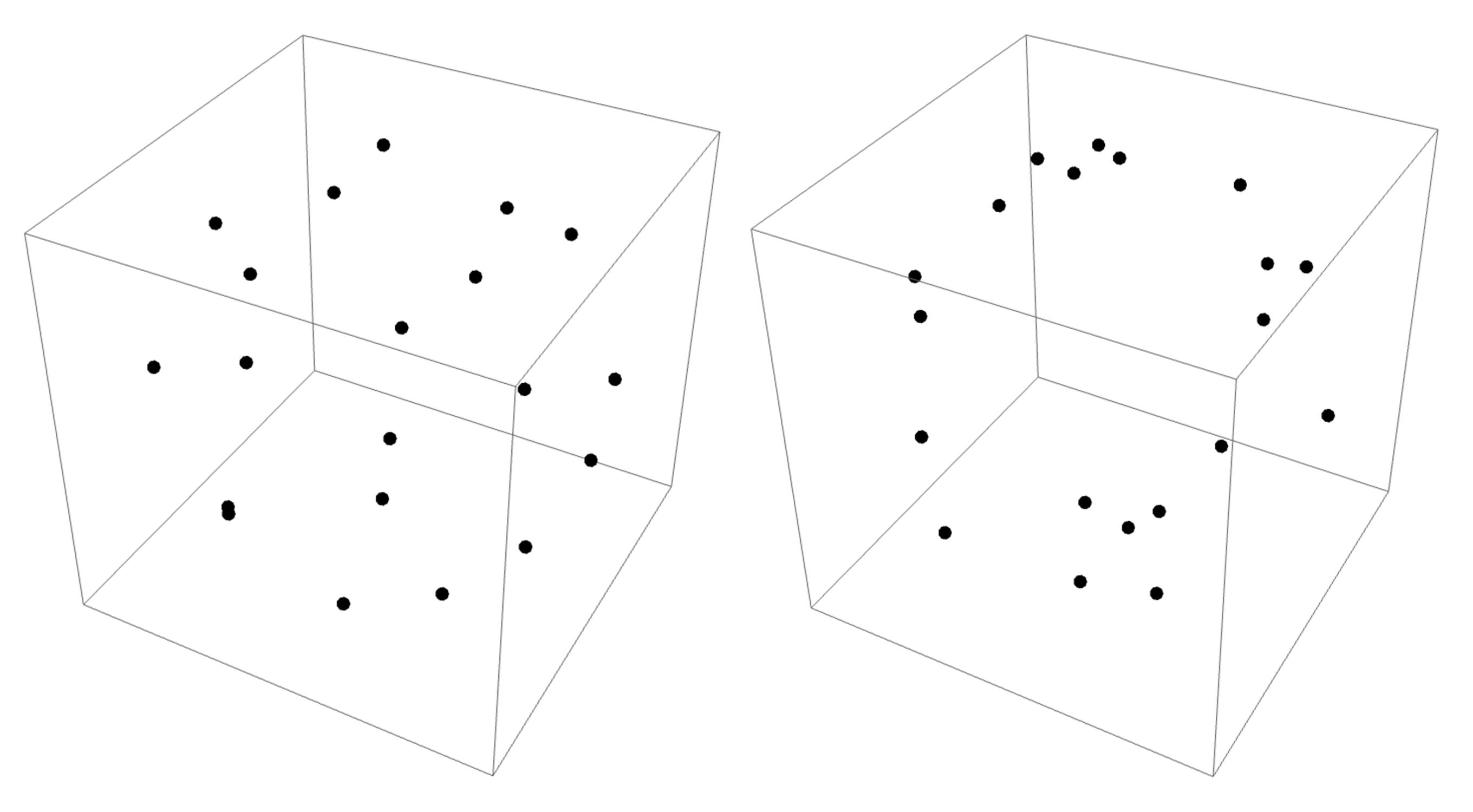

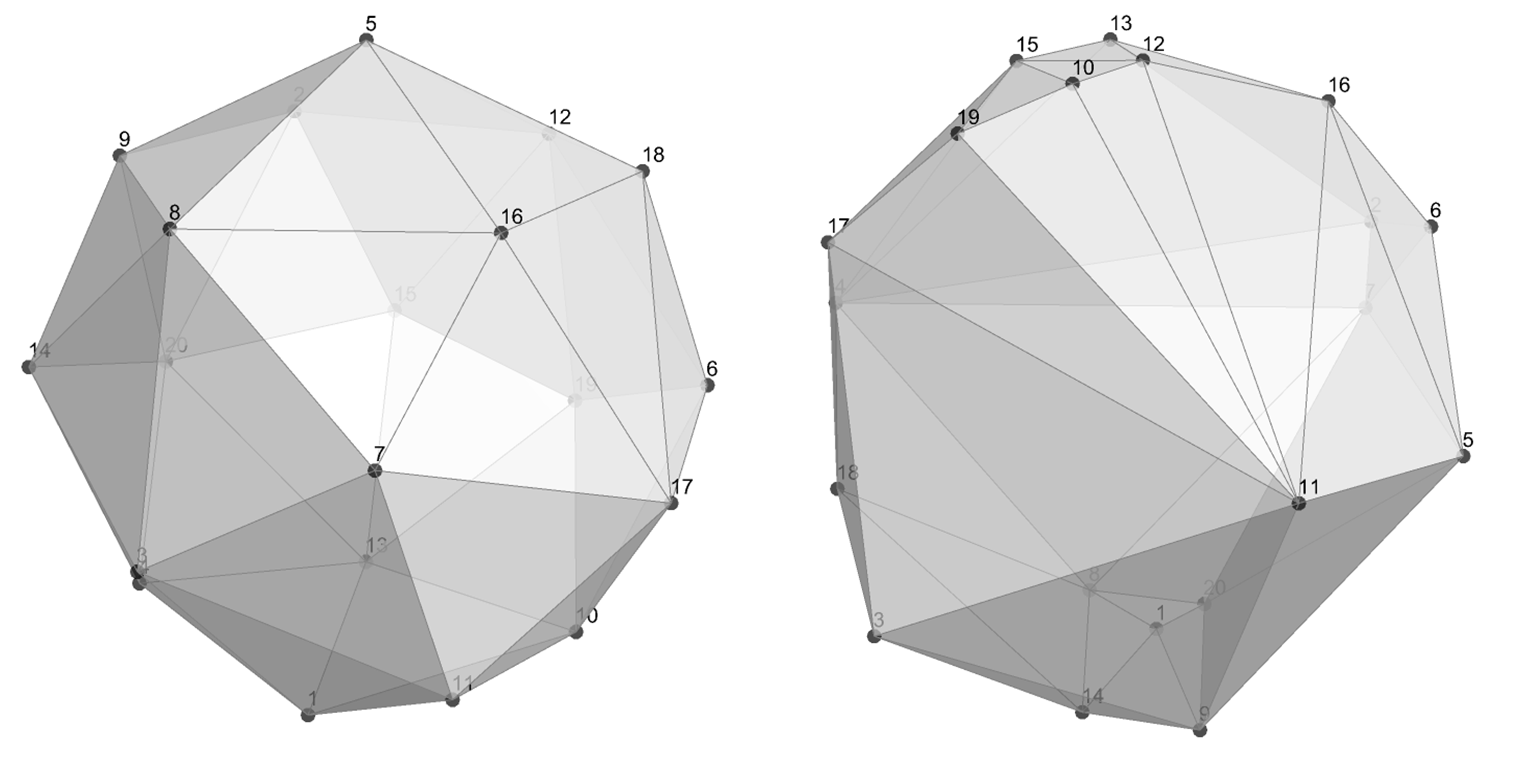

SFD relies on the polygon configurations that underpin HOA for virtual microphone/loudspeaker positions whereby each vertex represents an individual audio channel in the 3d decoding/encoding scheme. With SFD, these vertices are repositioned in uniform ways to create rotations and transpositions or nonuniform ways to create spatial artifacts and discontinuities, offering new immersive qualities.
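The specific SFD transforms are not spelled out here, so the following is only a hypothetical illustration of the distinction between uniform and nonuniform repositioning, using the six-vertex octahedral layout. A uniform rotation turns every vertex by the same angle; the nonuniform `compress_azimuth` (an invented example) scales each vertex's azimuth, which distorts the layout and opens a discontinuity behind the listener. The sketch covers only the geometry, not the audio decoding/re-encoding.

```python
import math

# Six vertices of an octahedral layout (front, back, left, right, up, down),
# each standing for one virtual microphone/loudspeaker channel.
OCTAHEDRON = [
    (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
    (0.0, 0.0, 1.0), (0.0, 0.0, -1.0),
]


def to_spherical(v):
    """Unit vector -> (azimuth, elevation) in radians."""
    x, y, z = v
    return math.atan2(y, x), math.atan2(z, math.hypot(x, y))


def from_spherical(az, el):
    """(azimuth, elevation) in radians -> unit vector."""
    return (math.cos(az) * math.cos(el), math.sin(az) * math.cos(el), math.sin(el))


def rotate_z(vertices, angle_deg):
    """Uniform displacement: every vertex turns by the same azimuth angle."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(x * c - y * s, x * s + y * c, z) for x, y, z in vertices]


def compress_azimuth(vertices, k):
    """Hypothetical nonuniform displacement: scale each vertex's azimuth by k.

    With 0 < k < 1 the layout is drawn toward the front. Directions just either
    side of +/-180 degrees are pushed to opposite sides, opening a seam
    (a spatial discontinuity) behind the listener.
    """
    out = []
    for v in vertices:
        az, el = to_spherical(v)
        out.append(from_spherical(az * k, el))
    return out


rotated = rotate_z(OCTAHEDRON, 90.0)          # front vertex moves to the left
squeezed = compress_azimuth(OCTAHEDRON, 0.5)  # left vertex moves to 45 degrees
```

In the squeezed layout the back vertex (azimuth 180°) lands at 90°, where the left vertex used to be, so if that channel were re-encoded at its displaced position, material from behind would be heard at the left.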


3d point, polygon, and energy vector representations of octahedral (6-channel) ambisonic coding

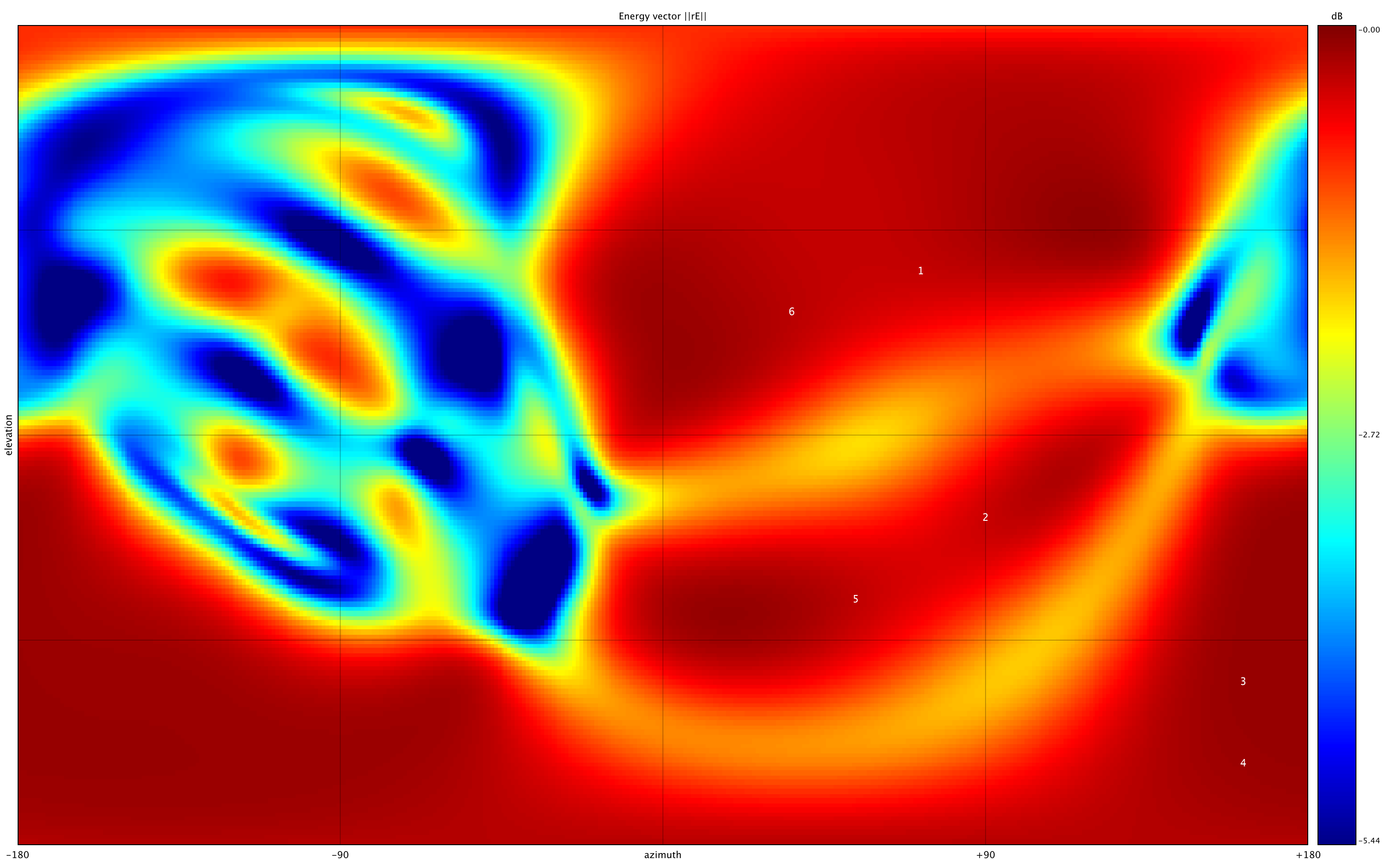

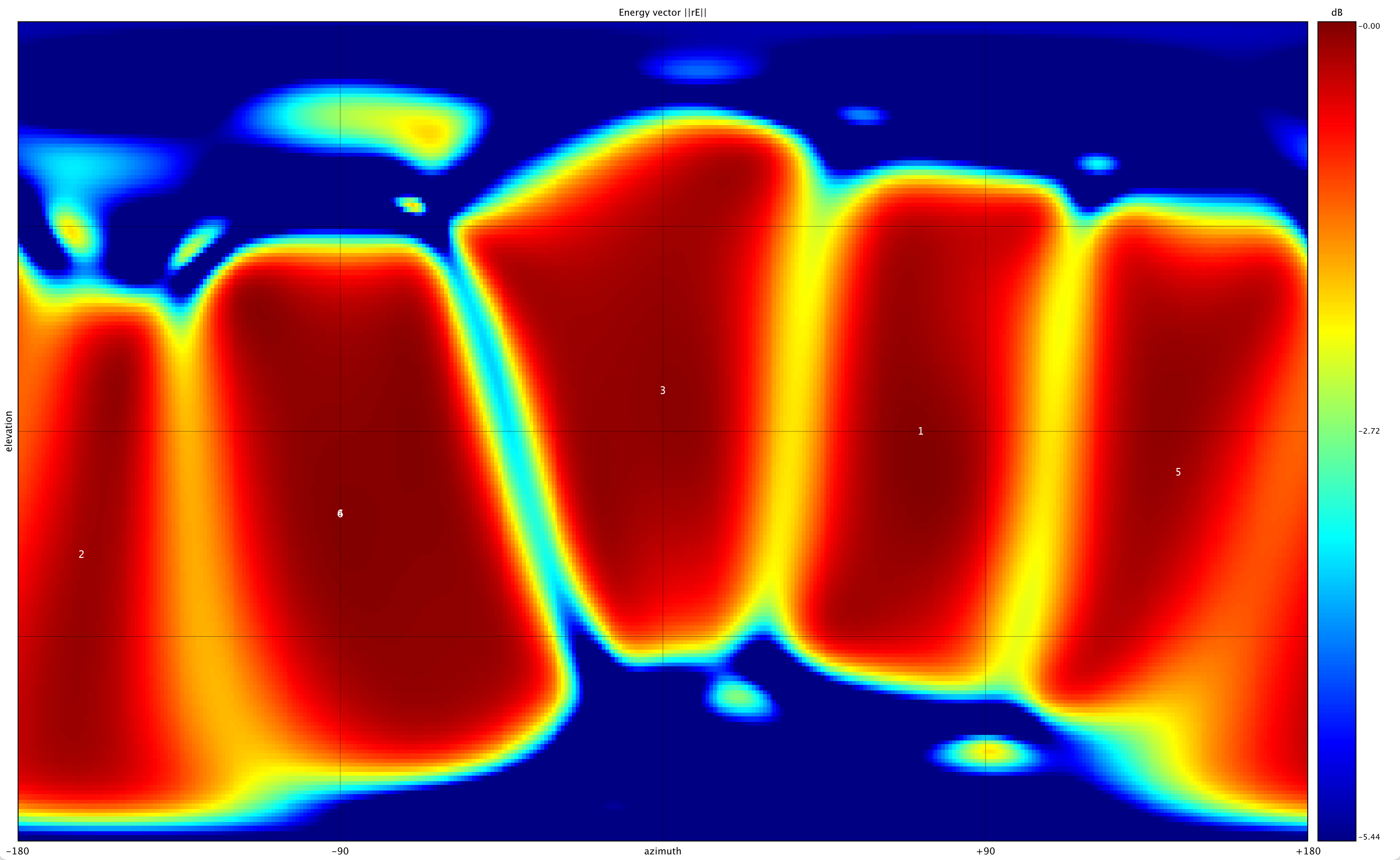

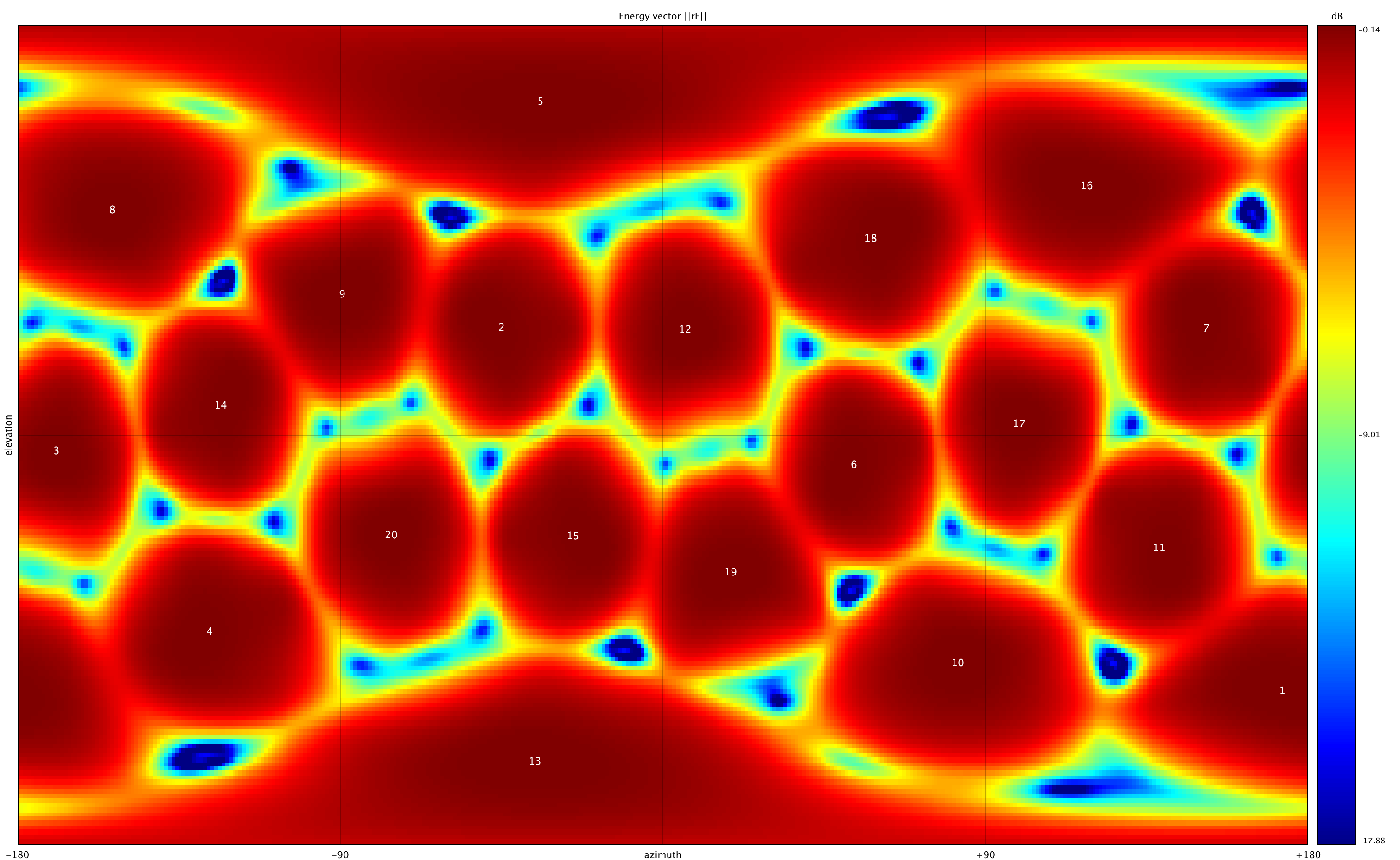

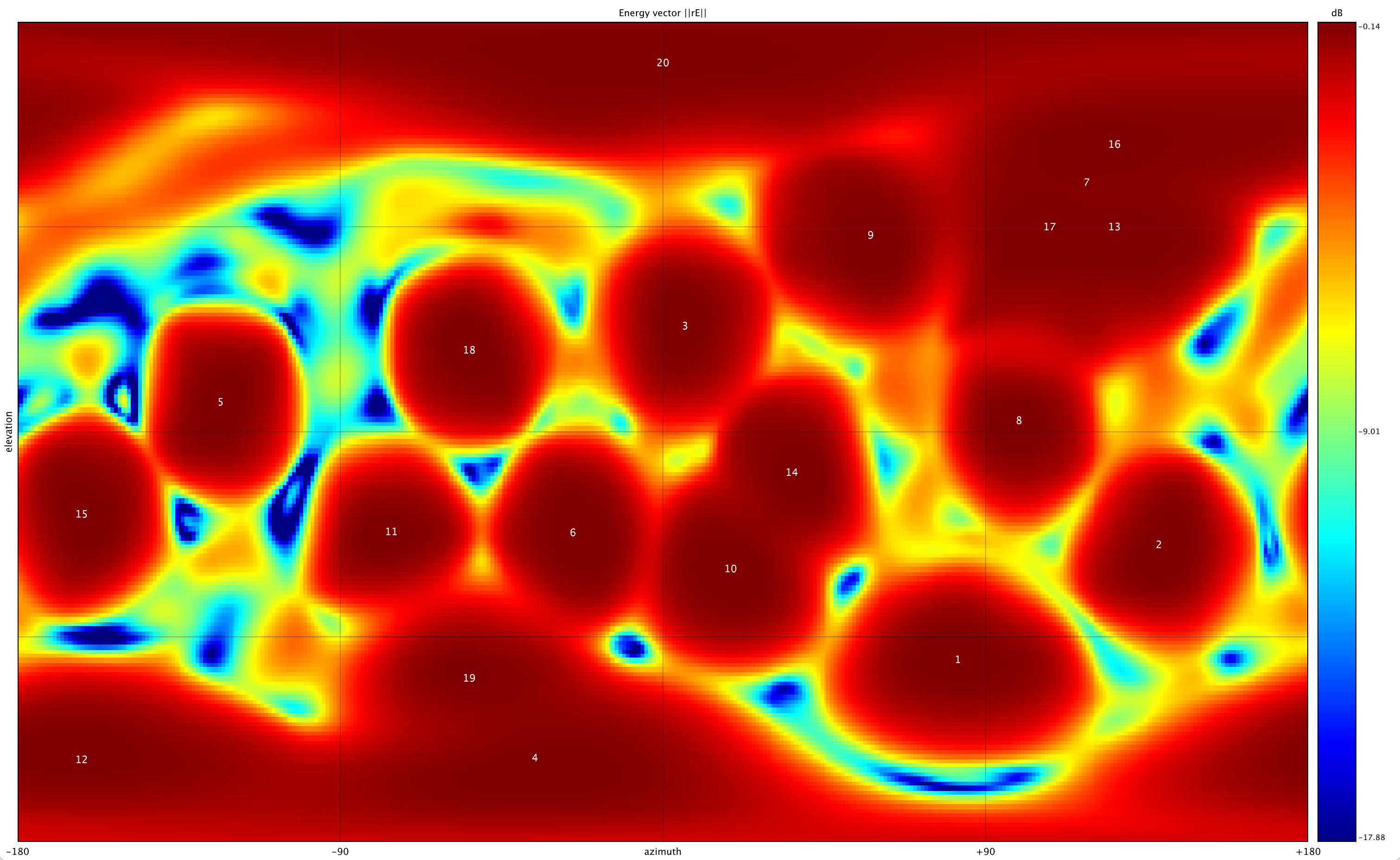

Energy vector visualizations of Sound Field Displacement, 6-channel

3d point, polygon, and energy vector representations of dodecahedral (20-channel) ambisonic coding

Energy vector visualizations of Sound Field Displacement, 20-channel
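The captions above refer to energy vector visualizations. A standard way to compute such a vector, following Gerzon's work on ambisonic decoding, is the energy-weighted mean of the loudspeaker directions, r_E = Σ gᵢ² uᵢ / Σ gᵢ², where gᵢ is the gain of channel i and uᵢ its unit direction. Its direction predicts where a source is perceived, and its length (at most 1) indicates how focused the image is. The sketch assumes the per-channel gains are already known and is not the code used to produce these figures; the helper names are hypothetical.

```python
import math

# Unit directions of a six-vertex octahedral layout: front, back, left, right, up, down.
OCTAHEDRON = [
    (1.0, 0.0, 0.0), (-1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
    (0.0, 0.0, 1.0), (0.0, 0.0, -1.0),
]


def energy_vector(gains, directions):
    """Gerzon energy vector r_E = sum(g_i^2 * u_i) / sum(g_i^2)."""
    energies = [g * g for g in gains]
    total = sum(energies)
    if total == 0.0:
        return (0.0, 0.0, 0.0)  # silence: no meaningful direction
    return tuple(
        sum(e * u[axis] for e, u in zip(energies, directions)) / total
        for axis in range(3)
    )


def describe(r_e):
    """Return (azimuth_deg, elevation_deg, length) of an energy vector."""
    x, y, z = r_e
    length = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation, length


# Equal gains on the front and left vertices only.
print(describe(energy_vector([1, 0, 1, 0, 0, 0], OCTAHEDRON)))
```

With equal gains on the front and left vertices, r_E points at 45° with a length of about 0.707, a less focused image than a single active loudspeaker (length 1). Displacing vertices changes the uᵢ and therefore reshapes these vectors.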

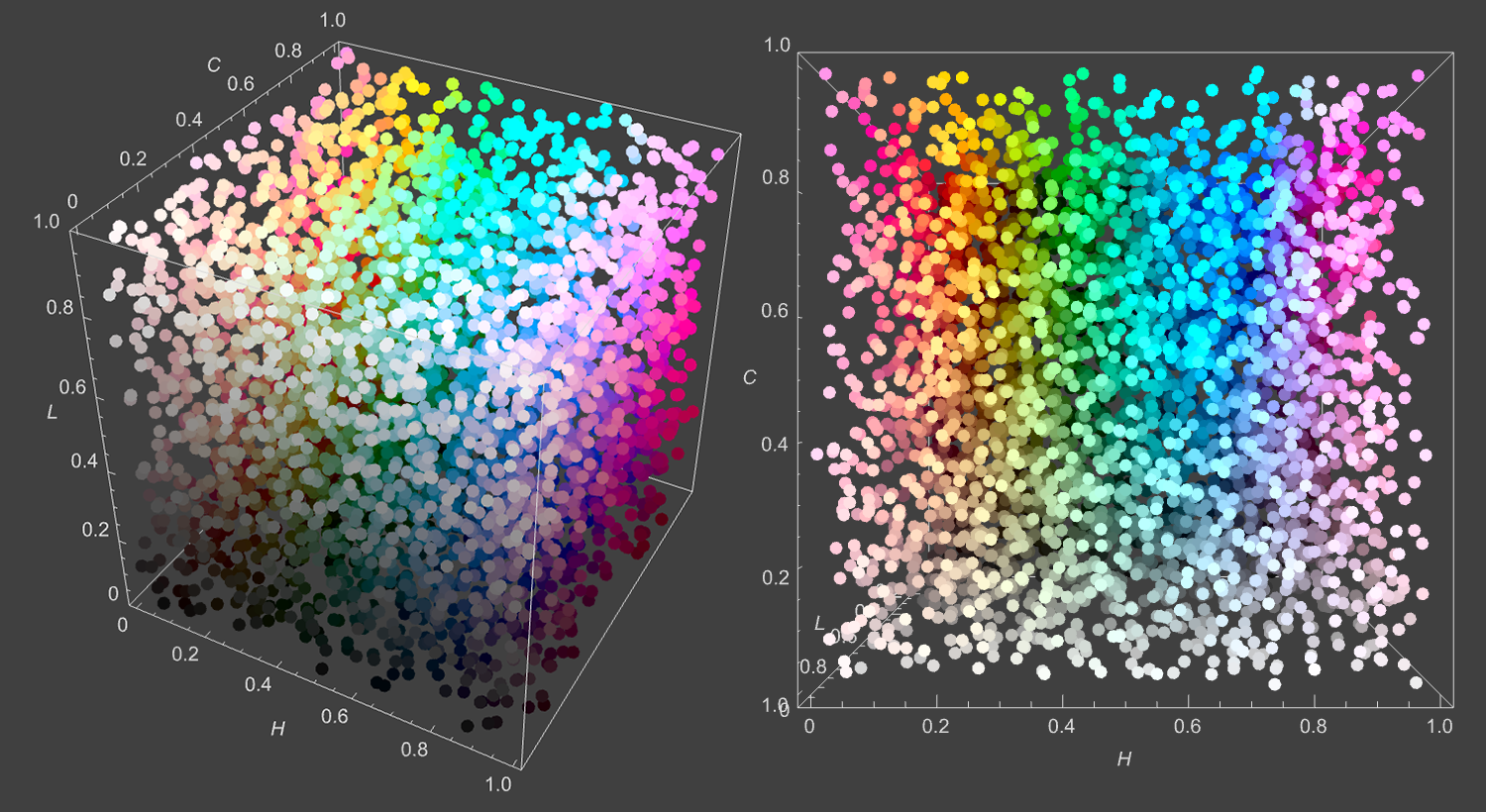

For SFD, I have created a link between color models and HOA, exploring their shared use of spherical and Cartesian coordinate systems. For instance, 3d color models such as Hue, Chroma, Luminance (HCL) can be mapped to XYZ spatial positions to place virtual microphones/loudspeakers for the HOA process. Repositioning vertices based on color data gives composers a new means to graphically score or automate spatial parameters, providing an alternative to standard music notation systems, which are inadequate for this purpose, and to software compromises such as timeline-based breakpoint editors. Furthermore, real-time visualization based on spherical coordinates (azimuth and elevation) is another area where salient color models can support sound design and musical analysis.
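One plausible way to read HCL as a spatial position is to treat it as a cylinder: hue as an angle, chroma as a radius, and luminance as height. The sketch below assumes that mapping, with chroma and luminance normalized to 0..1 and mid luminance (0.5) placed at ear level; the actual SFFX mapping is not specified here, and the function names are hypothetical.

```python
import math


def hcl_to_xyz(hue_deg: float, chroma: float, luminance: float):
    """Cylindrical HCL -> XYZ: hue is the angle, chroma the radius, luminance the height.

    Assumes chroma and luminance are normalized to 0..1; luminance 0.5 maps to z = 0.
    """
    h = math.radians(hue_deg)
    return (chroma * math.cos(h), chroma * math.sin(h), 2.0 * luminance - 1.0)


def xyz_to_direction(x: float, y: float, z: float):
    """Azimuth/elevation in degrees for placing a virtual microphone/loudspeaker."""
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation


# A short hypothetical "color score": each color becomes a vertex direction.
score = [(0.0, 1.0, 0.5), (90.0, 1.0, 0.5), (180.0, 0.5, 1.0)]
directions = [xyz_to_direction(*hcl_to_xyz(*color)) for color in score]
```

In this mapping a fully saturated color at mid luminance sits on the horizontal plane at the azimuth of its hue, while brighter or darker, less saturated colors rise or fall toward the poles. A neutral gray at mid luminance collapses to the origin, where direction is undefined, so a real mapping would need a rule for that case.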

Sifting (2018-21)

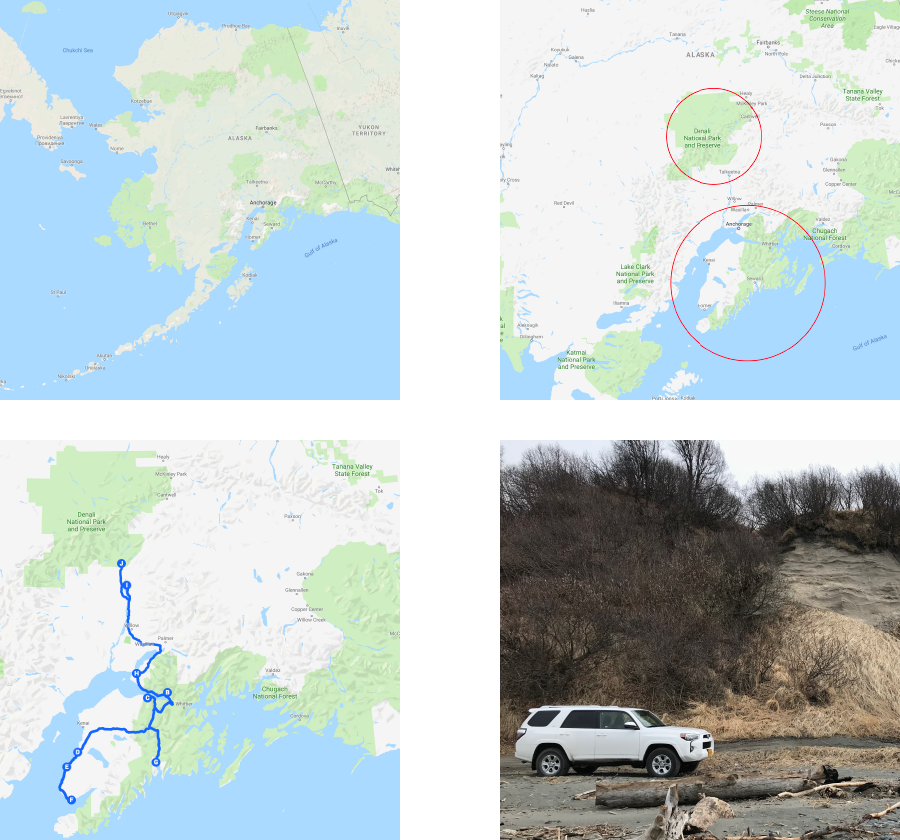

In 2018, I was awarded a Polar Lab Residency supported by the Anchorage Museum. My project was titled Edges in the Alaskan Soundscape. Over the course of three weeks in April/May 2018, I concentrated on spatial audio field recording in areas of the Kenai Peninsula and Denali National Park. During this timeframe, alongside field work, I met with National Park Service scientists to learn about acoustic monitoring and soundscape ecology from their perspective as researchers. The aim of my project was to conduct ambisonic field recording in areas with low human population density in order to observe and document “edges” while creating source material for new compositions and sound installations. Sifting is an outcome of this work. It debuted as a 4-channel sound installation for the Listen Up exhibition at the Anchorage Museum (April–October 2021). It was composed in third order ambisonics and may be presented on larger speaker arrays. A stereo mix is provided on this page.


Recording site: Resurrection Bay


In my field work, edges recurred thematically as:

- A spatial relationship, areas between the built environment and natural land

- A seasonal timeframe, the period between winter (ice break-up) and spring

- A practical situation, what was manageable for solo expeditions in difficult weather and terrain

- Ecotone, a region of transition between biological communities

- Geographical identity, the Alaskan areas of Kenai and Denali as at the edge of the Arctic

- A portent of climate change and, with it, a transforming phenology (alteration of life cycle events)

Through the residency, I was able to:

- Explore spatial audio field recording techniques by focusing on the use of ambisonic microphones.

- Develop and test strategies for distributed spatial audio recording using several GPS-tracked, time-synchronized recorders.

- Create an extensive library of sound recordings for use in new compositions, performances, and installations for multichannel sound systems and high-density loudspeaker arrays; the total amount of audio captured on this trip was approximately 120 hours.
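Positions and synchronized clocks both matter for distributed recording: with each recorder's location known, the time a sound takes to travel between recorders can be estimated and compared against the synchronized timestamps. The sketch below is a hypothetical helper, not part of the original workflow. It assumes the haversine great-circle formula with a mean Earth radius of 6,371 km (adequate over short field distances) and the ideal-gas approximation for the speed of sound in dry air, c ≈ 331.3·√(1 + T/273.15) m/s.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two GPS fixes given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temperature temp_c (degrees C)."""
    return 331.3 * math.sqrt(1 + temp_c / 273.15)


def travel_time_s(lat1, lon1, lat2, lon2, temp_c=0.0):
    """Seconds for sound to travel in a straight line between two recorders."""
    return haversine_m(lat1, lon1, lat2, lon2) / speed_of_sound(temp_c)
```

For example, two recorders 1 km apart at 0 °C are separated by about 3.02 s of acoustic travel time (1000 / 331.3), so aligning their recordings depends on both accurate positions and shared time.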

Kenai and Denali proved to be excellent sites because:

- As places new to me, they required a high level of attention.

- I could experience an expanded acoustic horizon; without competition from human-caused noise, one can hear further, and recordings have a related expansive quality.

- It is easy to find quietness; different figure/ground relationships become noticeable, and new minimum technical requirements for equipment become evident.

- There is great diversity in the built environment, land use, and natural land areas accessible from Alaskan highways.

- There is extended daylight, which provides more time for exploration / experimentation.

Outwash (2019)

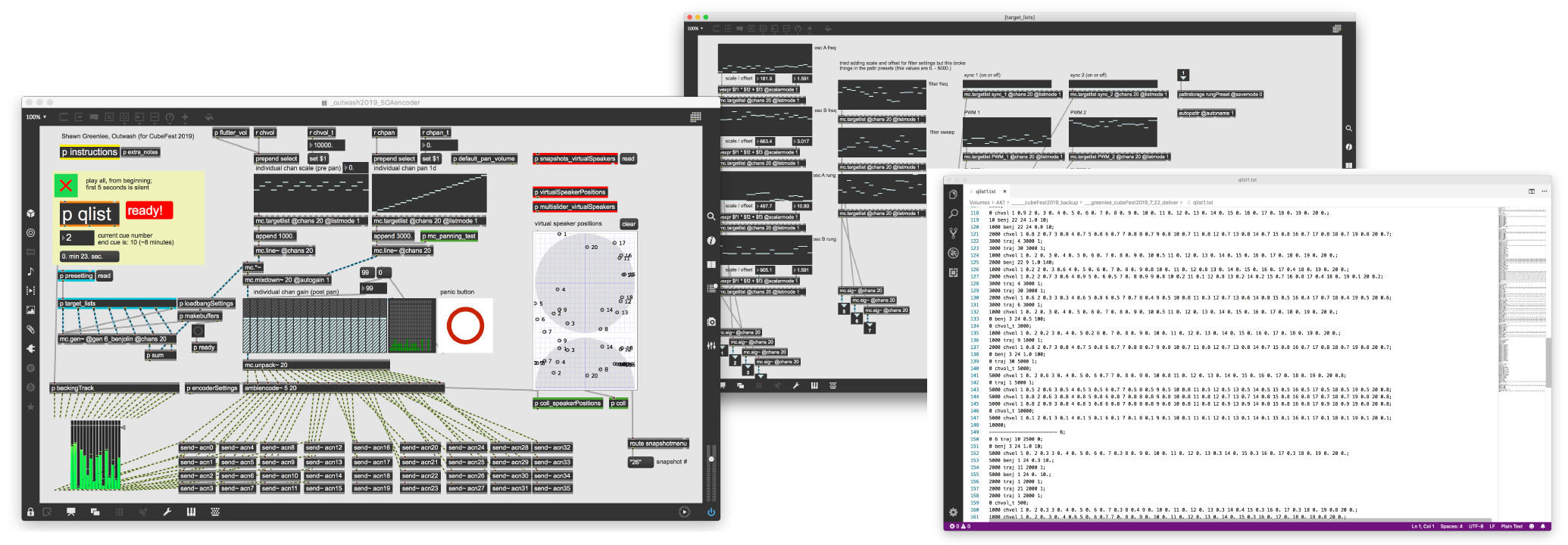

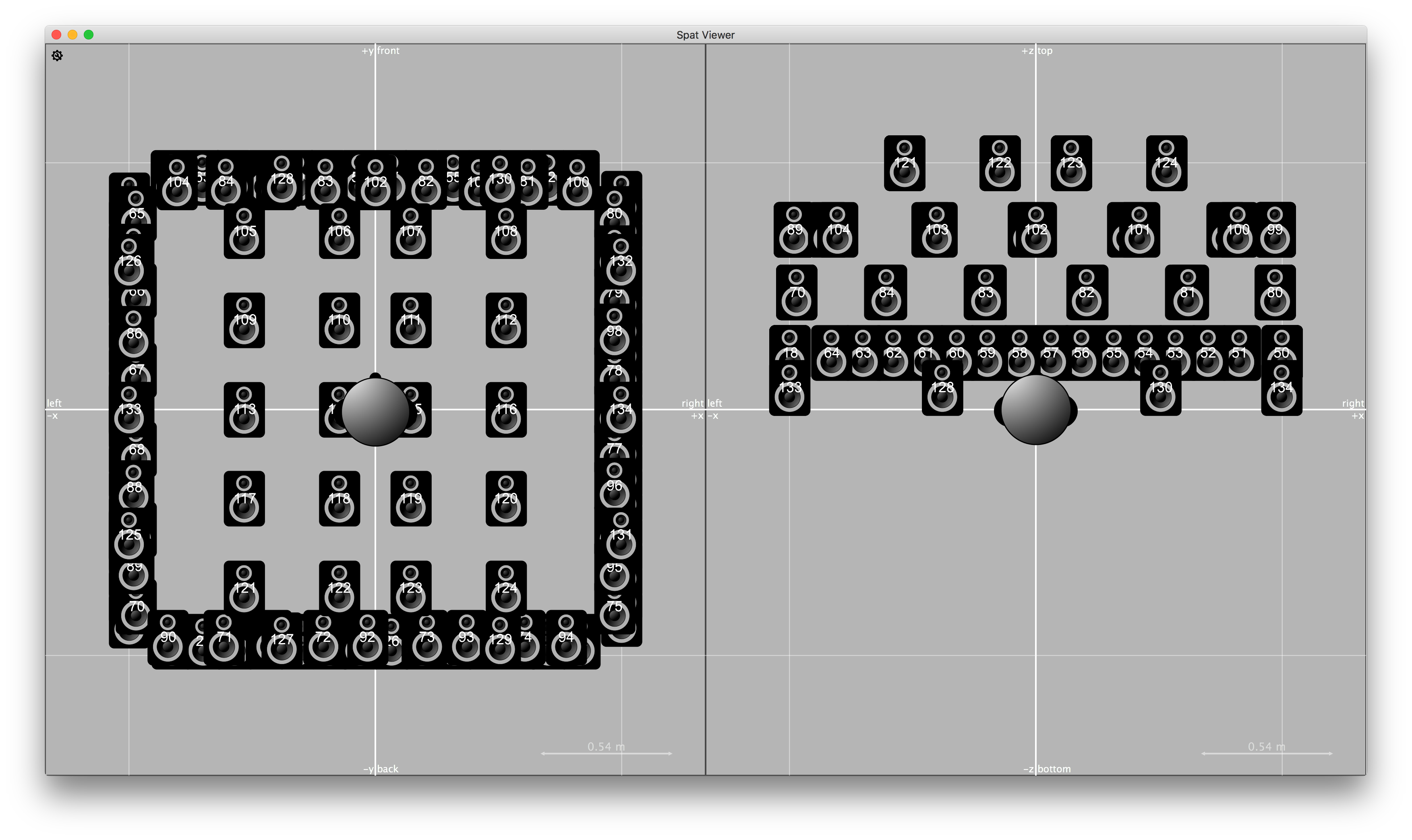

Outwash was composed in 2019 for high density loudspeaker arrays (HDLA) with high order ambisonics. Development of the piece began during a residency at Virginia Tech’s Cube in March 2019, and it was later completed at RISD’s Studio for Research in Sound and Technology (SRST). In this work, twenty independent voices reliant upon the same underlying erratic synthesis procedure move throughout the room in varied spatial formations. Audible contours and modulations are produced by parametric deviation and distance fluctuations between the voices of this ensemble. While the piece is fixed in its duration and sequence, real-time processes afford unique outcomes in each performance. The piece was created in MaxMSP and runs autonomously. Outwash premiered at Cube Fest on a 140-channel loudspeaker array (2019, Virginia Tech, Blacksburg) and was also included in the International Computer Music Conference (2021, Santiago).

Outwash was most recently presented in fifth order ambisonics for a 41.4-channel loudspeaker array on June 9, 2023, in concert at the Lindemann Performing Arts Center, Brown University.


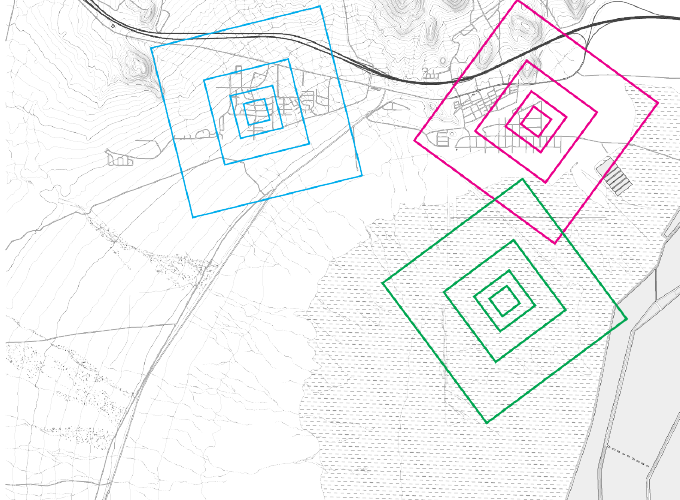

Composition documentation, Virginia Tech 2019