SFFX (2022-curr.)

Work in progress

Talk from March 12, 2025 that summarizes recent advances in this work:

Background:

Since 2015, my artistic work and research have focused primarily on an area of spatial audio involving High Density Loudspeaker Arrays (HDLA), which are typically permanent installations with 24 or more loudspeakers in a cube or hemispherical configuration. Some HDLA facilities feature arrays with hundreds of loudspeakers to provide more resolution and precision and to support a wider range of spatial audio techniques. For this work, I have traveled to various HDLA facilities to participate in residencies and workshops and to perform and present at conferences and festivals. In 2018, I founded the Studio for Research in Sound and Technology (SRST) at RISD, which houses a 25.4-channel loudspeaker array. I serve as Faculty Lead for SRST, overseeing its staff, curriculum delivery, research advancement, and public engagement.

A prevalent technology for creating immersive audio experiences in HDLA facilities, SRST included, is High Order Ambisonics (HOA).

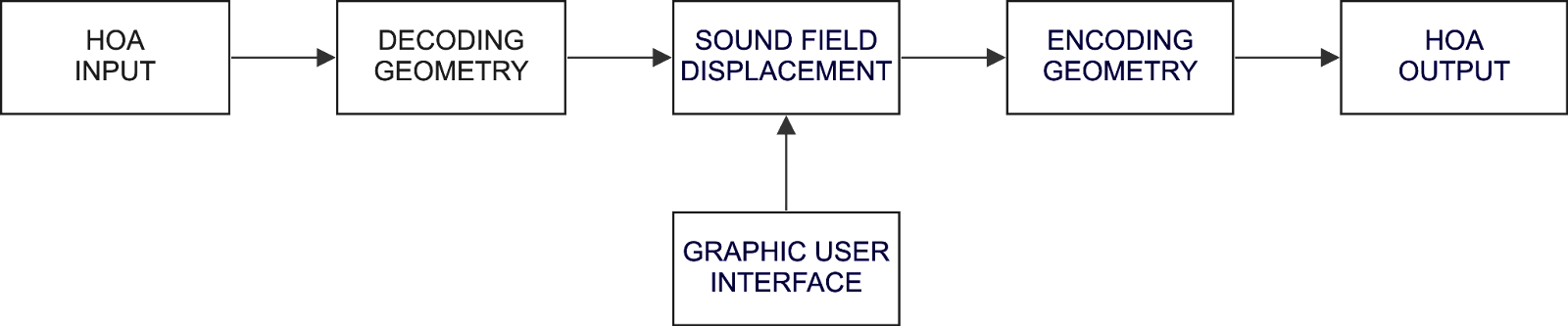

In 2021-22, I was the Project Director for an NEA grant-funded software development project that provides proof of concept for my new work in spatial audio going forward. One outcome of this work is FOAFX, a command line tool for applying spatially positioned audio effects to first-order ambisonic sound files. It is currently available as a Node.js program, including source code. With this project complete and a wealth of preliminary research accomplished, I am now building on this work to create “SFFX.” SFFX is a new, multiyear software development project with the goal of producing an open package for 3d audio effect processing intended for sound designers and composers working with High Order Ambisonics (HOA). Because SFFX employs HOA in its approach, the outcomes will be scalable to a variety of loudspeaker systems, as well as to stereo headphones through binaural rendering.

SFFX aims to solve a problem encountered in audio workflows involving ambisonics, wherein several audio effect processes (e.g., compression, distortion, noise cancellation) cannot be used with encoded 3d audio without ruining the spatial dimensions of the source file or stream. While some workarounds are known within the 3d audio community, they involve combining several tools with complex signal routing, which can be puzzling and deters most users. The goal is to apply an effect to the entire sound field, or to a region within it, without entirely losing the 360° fidelity. An exciting affordance provided by the SFFX approach is that a spatially positioned audio effect can be automated to move through the sound field, resulting in a dynamic spatial wet/dry effect mix that can provide a wellspring for creativity. As a practical example, similar to a spotlight tracking a performer on a stage, SFFX could be used to focus in on a moving sound source and increase its gain while suppressing background noise.
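
The spotlight idea can be illustrated with a minimal first-order sketch. This is not the FOAFX or SFFX implementation; it assumes a (W, X, Y, Z) channel layout with SN3D-style gains, a cardioid virtual microphone, and hypothetical function names (`virtual_cardioid`, `spotlight_effect`). The effect is applied only to what the virtual microphone picks up, and the difference between the processed and unprocessed beam is re-encoded at the same direction, so the rest of the sound field passes through unchanged.

```python
import math

def direction(azimuth_deg, elevation_deg):
    """Unit vector (x, y, z) for an azimuth/elevation pair in degrees."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(az) * math.cos(el), math.sin(az) * math.cos(el), math.sin(el))

def encode(sample, u):
    """Encode one mono sample to first order (W, X, Y, Z); SN3D-style gains assumed."""
    return (sample, sample * u[0], sample * u[1], sample * u[2])

def virtual_cardioid(frame, u):
    """Sample the sound field with a cardioid virtual microphone aimed along u."""
    w, x, y, z = frame
    return 0.5 * (w + u[0] * x + u[1] * y + u[2] * z)

def spotlight_effect(frame, u, effect, mix=1.0):
    """Process only the beam aimed along u, then re-encode the wet/dry difference."""
    beam = virtual_cardioid(frame, u)
    delta = mix * (effect(beam) - beam)
    return tuple(f + d for f, d in zip(frame, encode(delta, u)))

def double_gain(sample):
    """Stand-in effect for illustration: a plain 2x gain."""
    return 2.0 * sample

front, back = direction(0, 0), direction(180, 0)
# A source in the spotlight is boosted; a source directly behind it is left alone.
boosted = spotlight_effect(encode(1.0, front), front, double_gain)
untouched = spotlight_effect(encode(1.0, back), front, double_gain)
```

Moving the effect through the sound field then amounts to changing `u` (and optionally `mix`) from one audio frame to the next. A cardioid is a very wide beam, so sources off to the side are partially affected; higher orders would allow a narrower region.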

Further, SFFX will offer several unconventional methods for altering 3d audio through a process I have termed Sound Field Displacement (SFD).

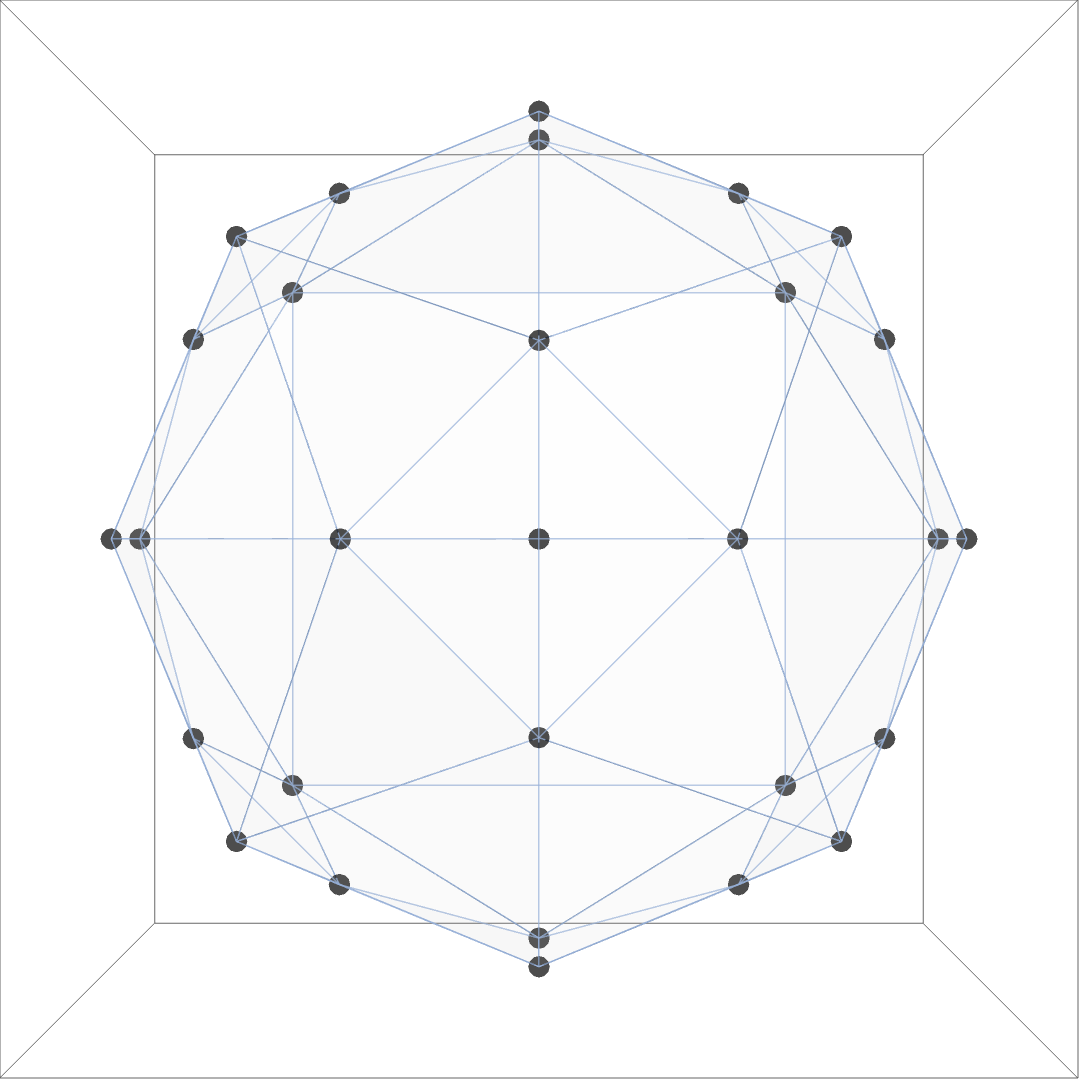

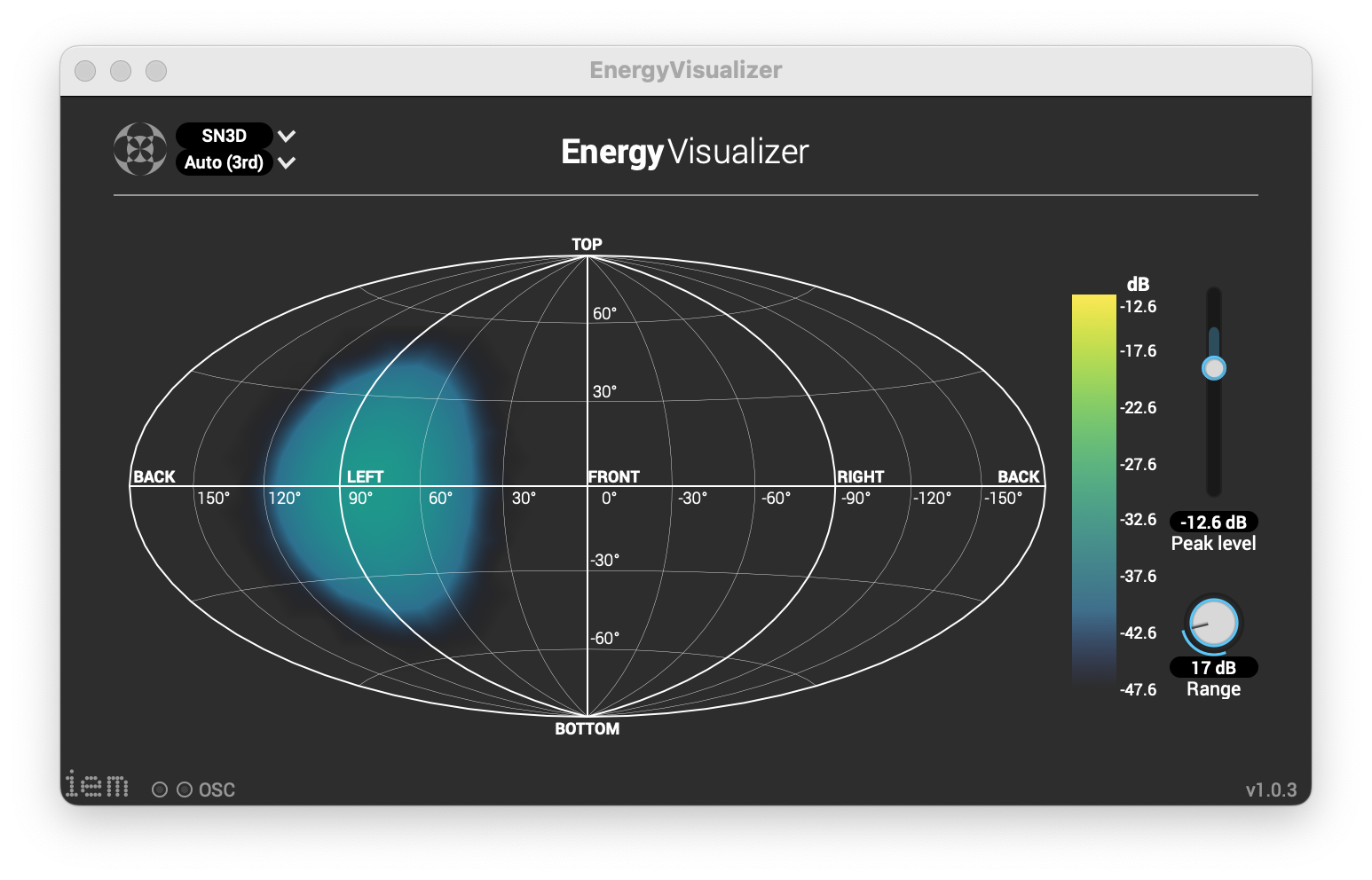

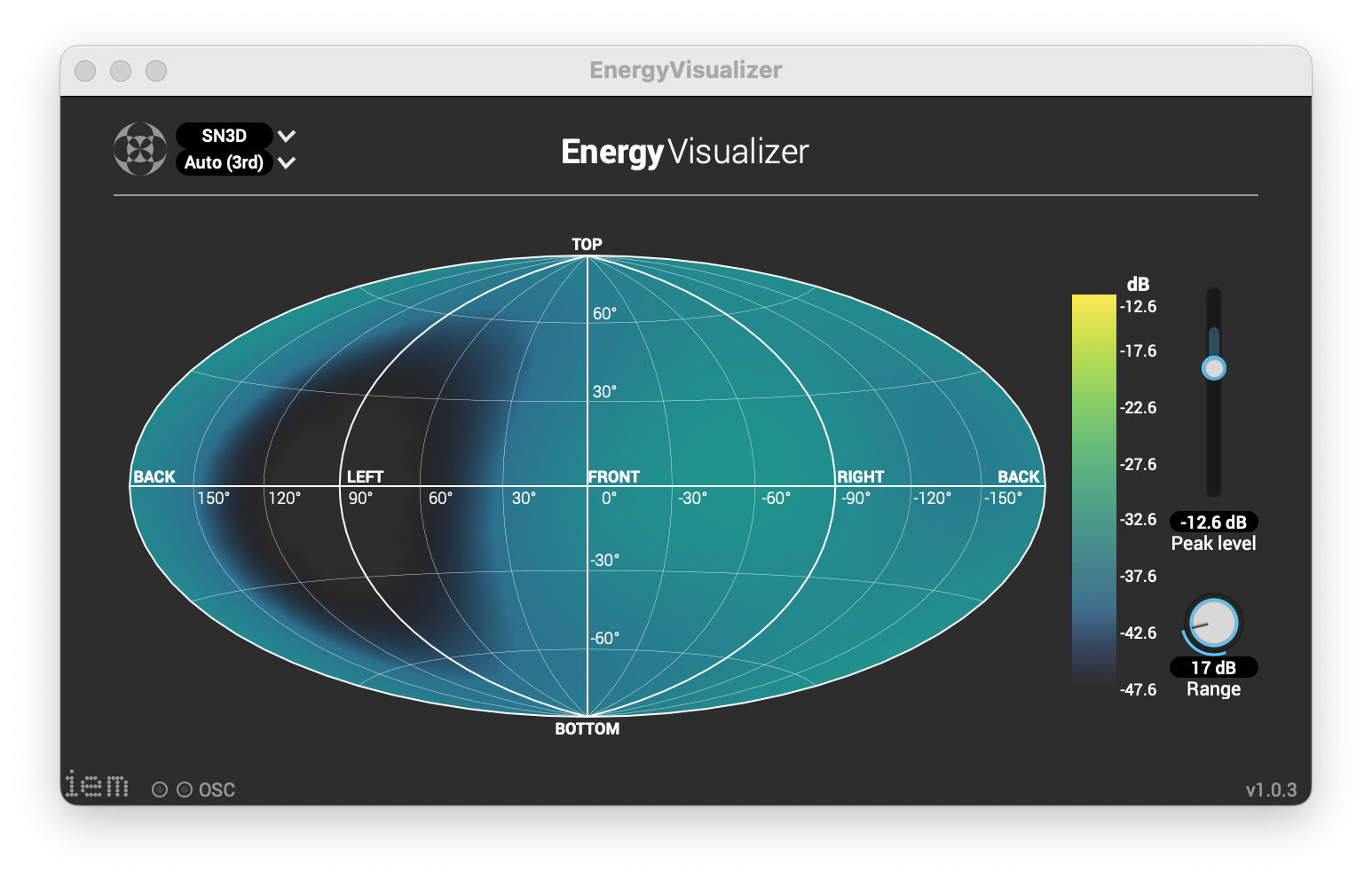

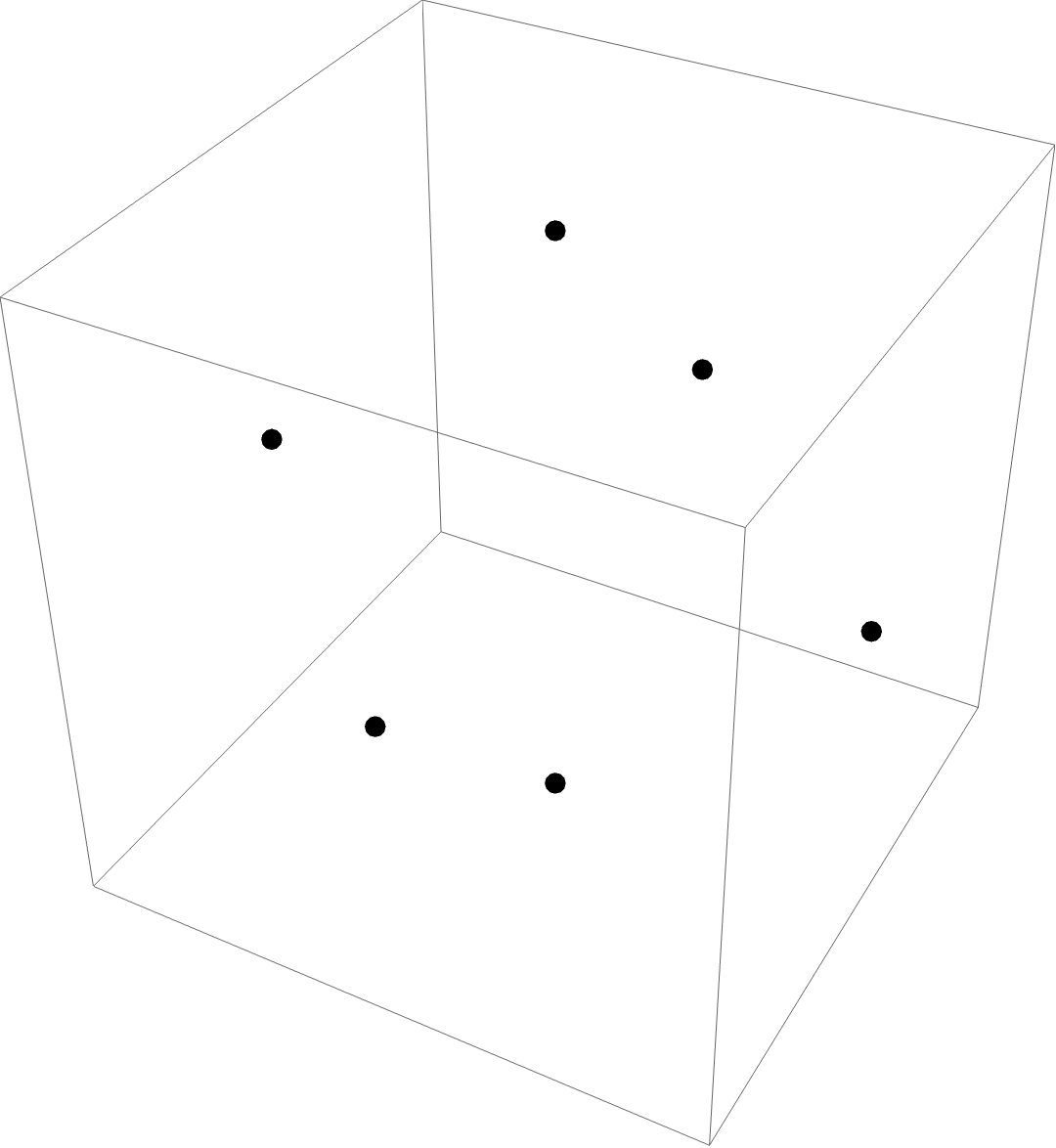

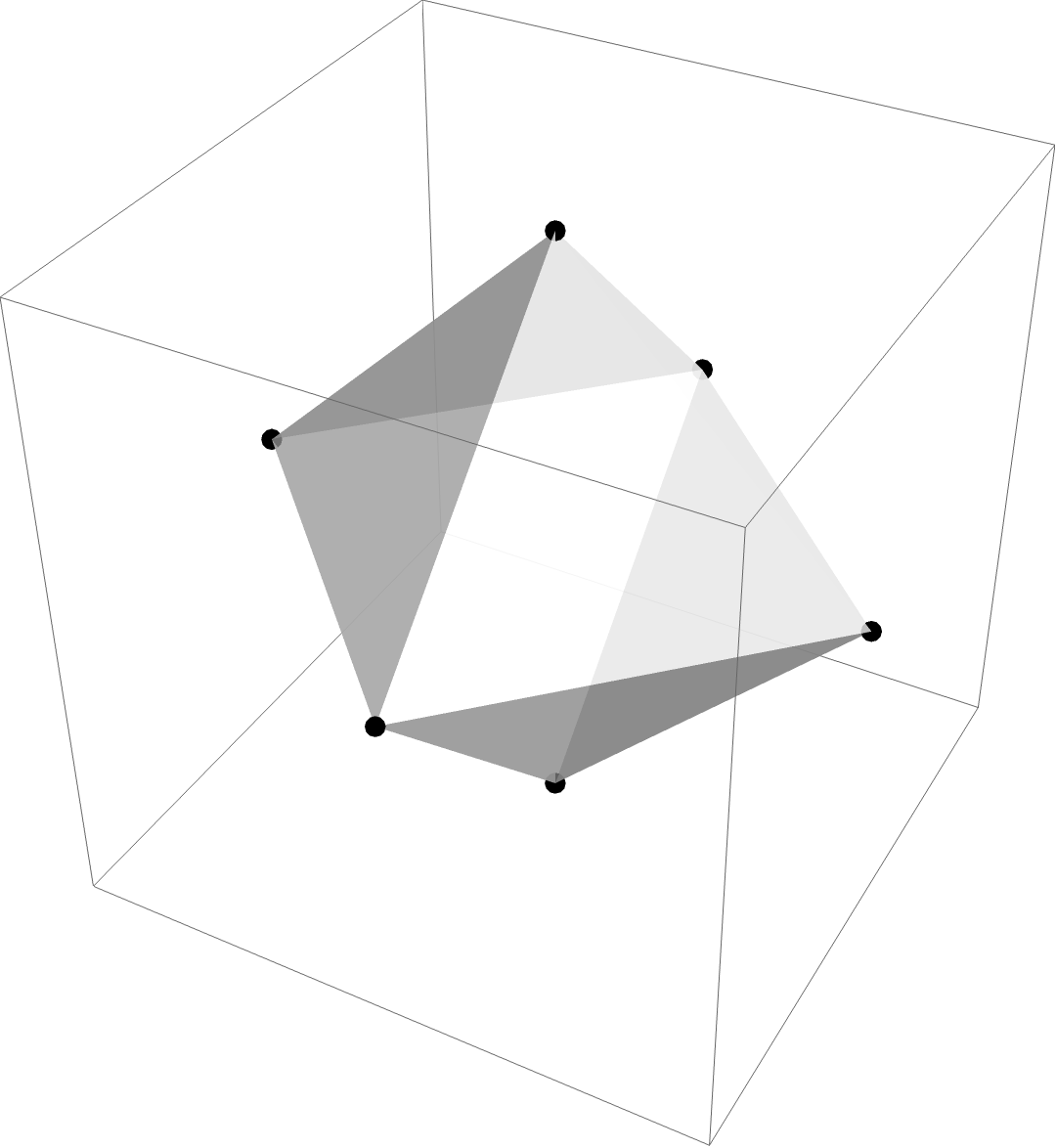

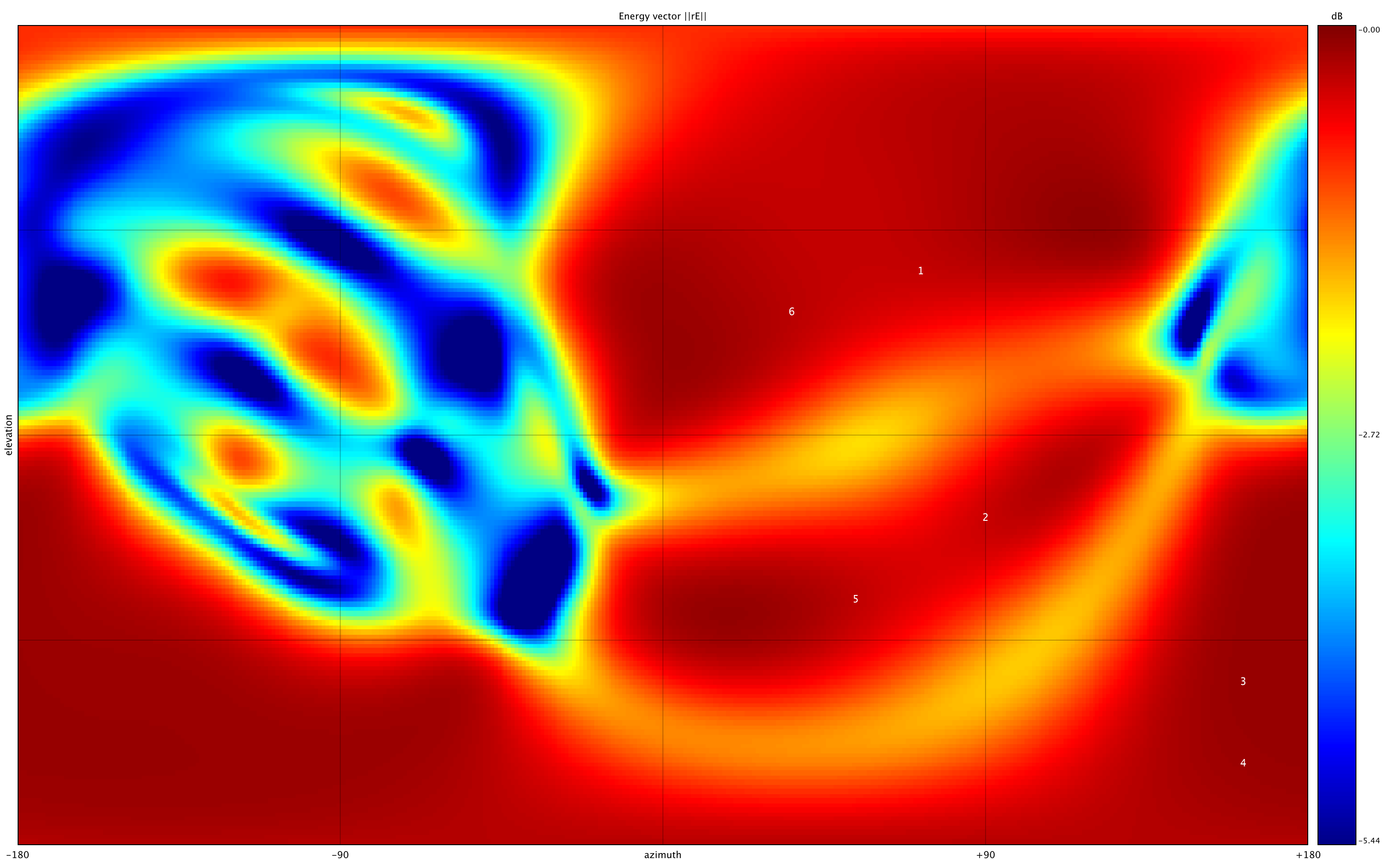

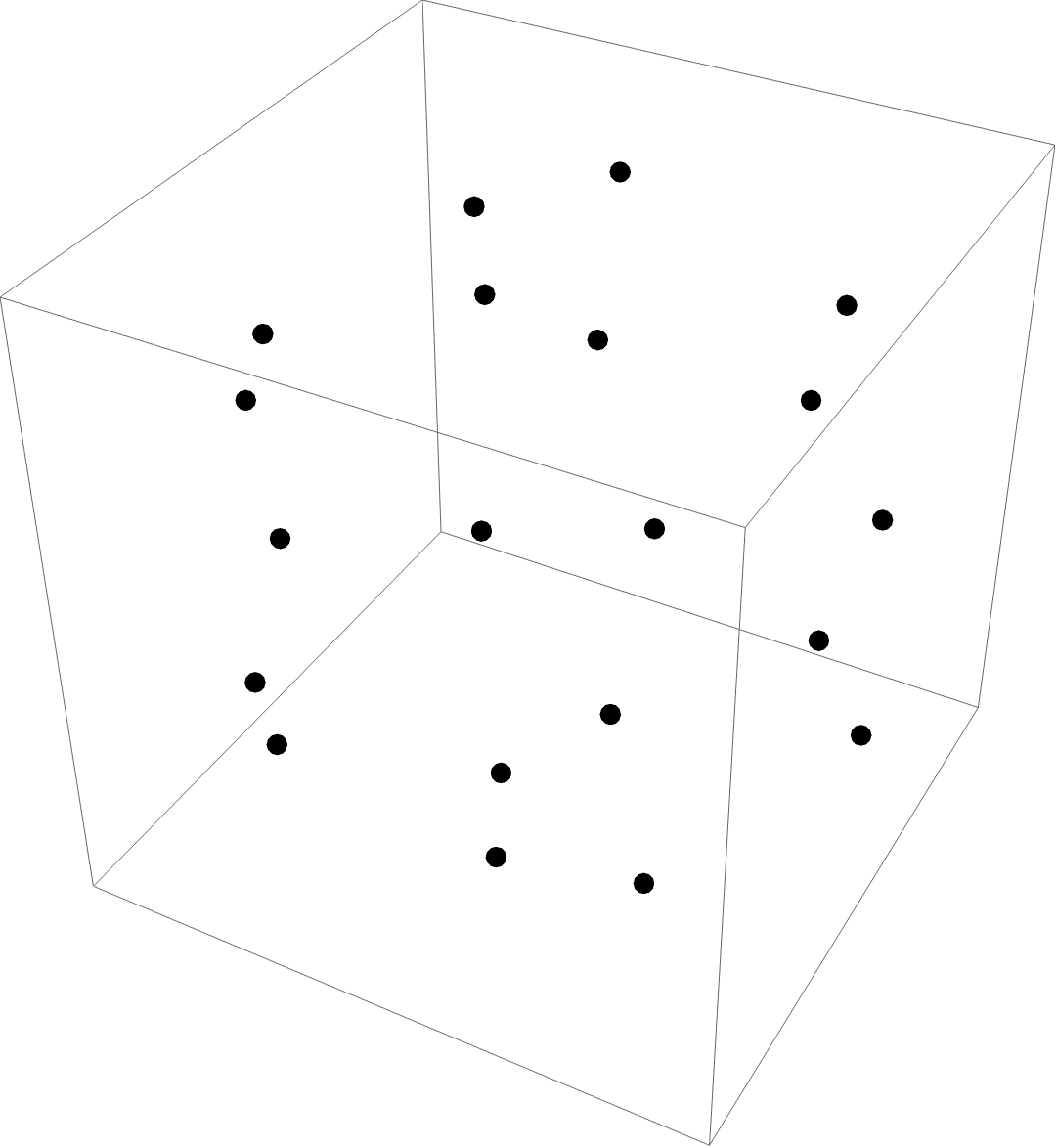

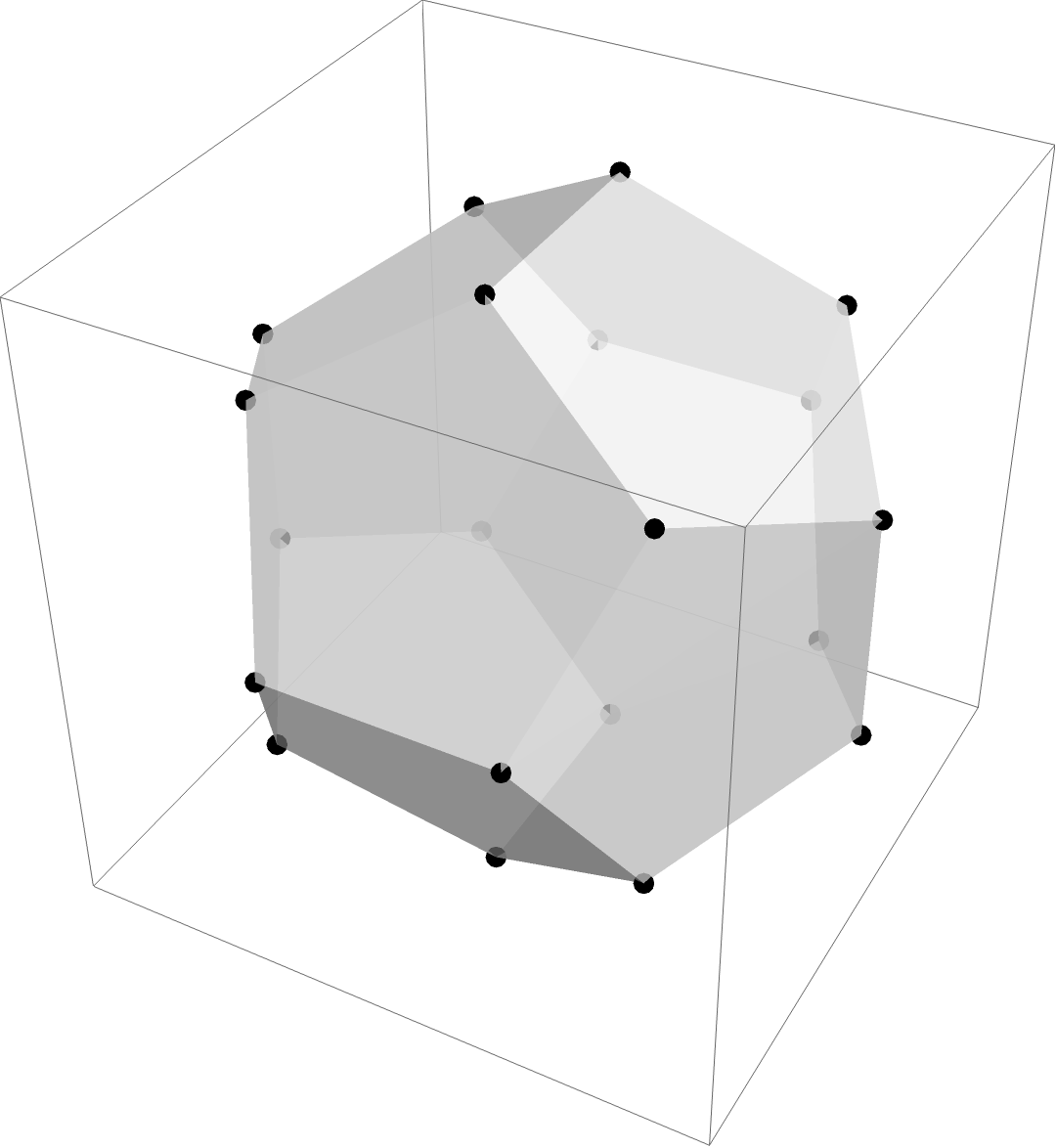

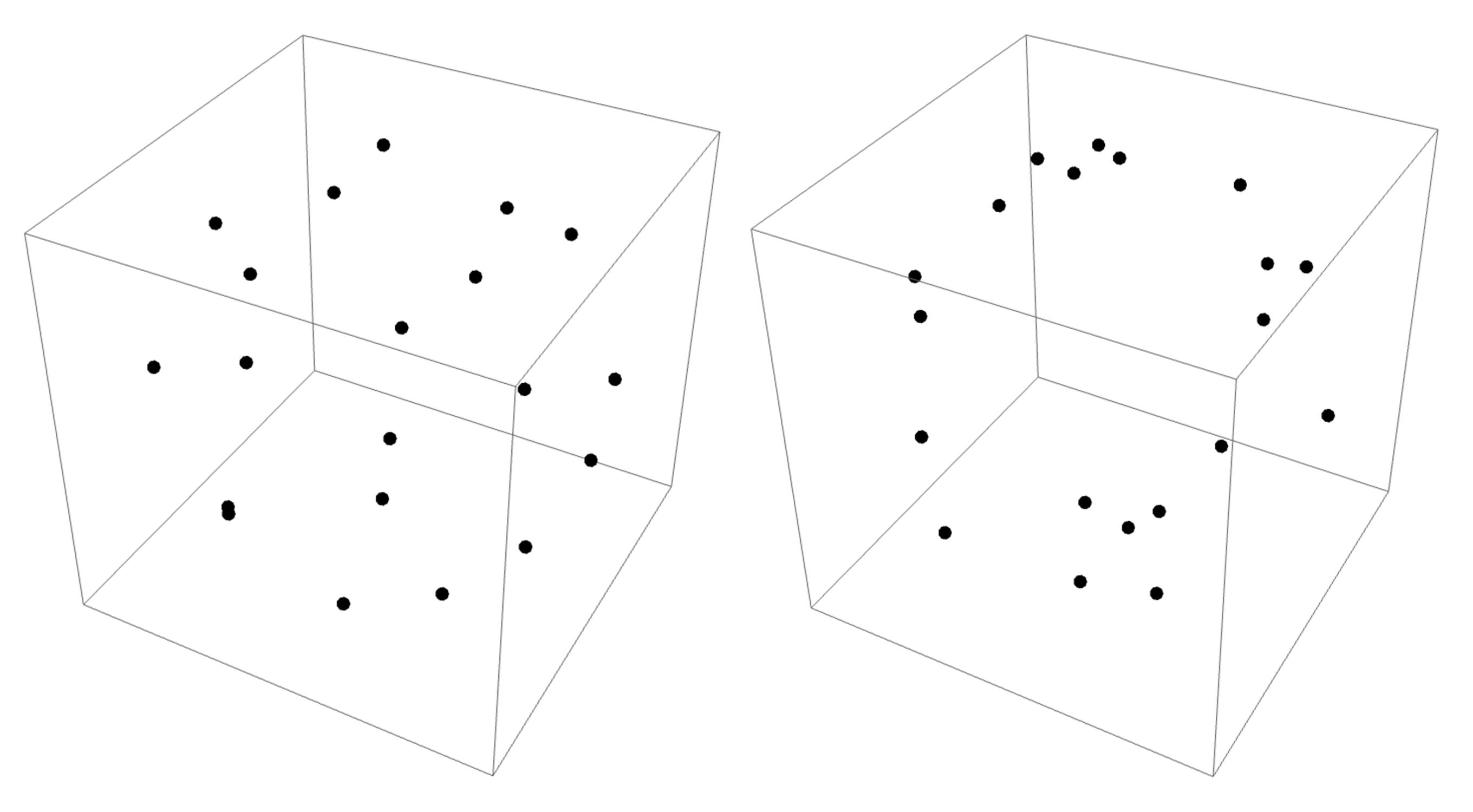

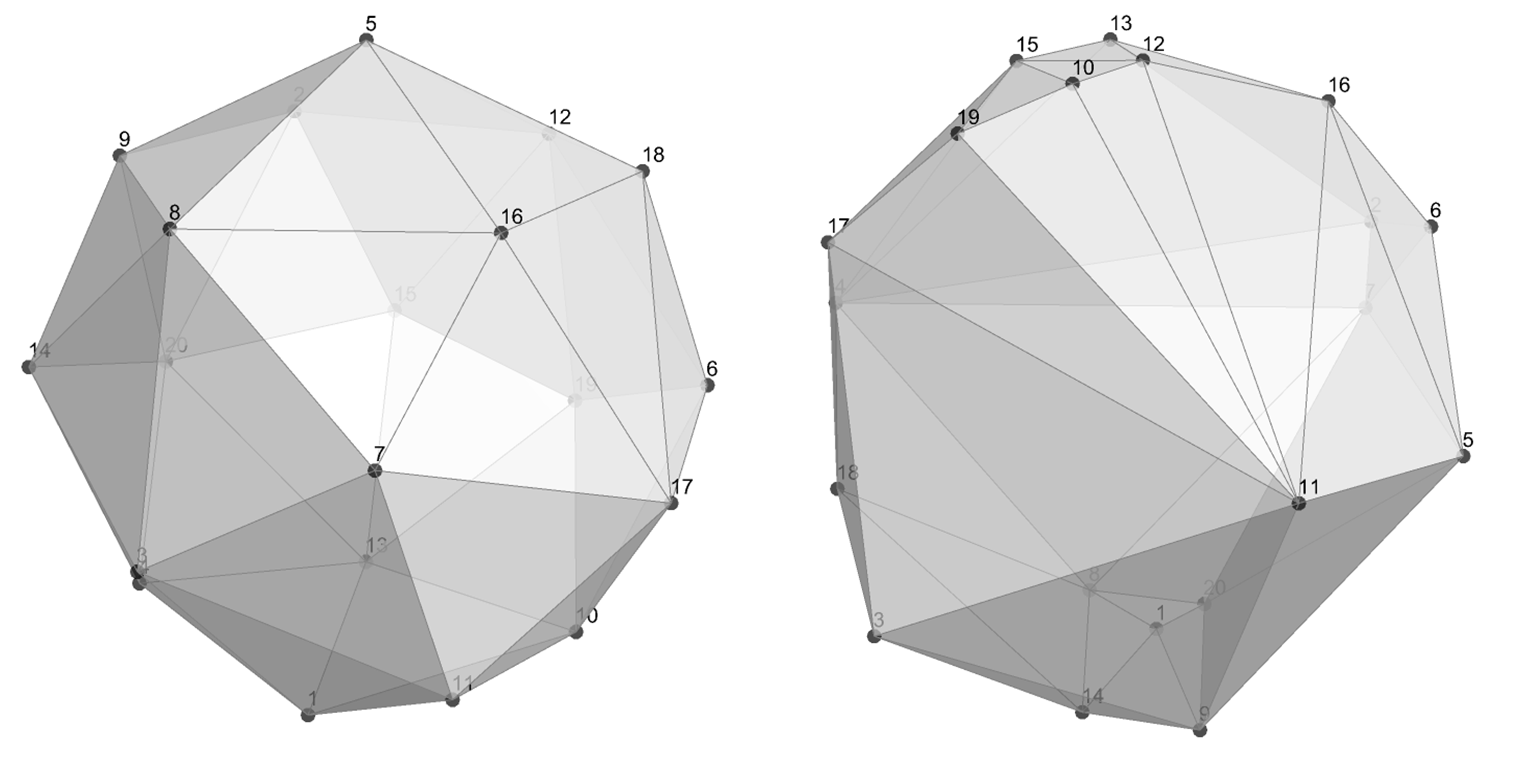

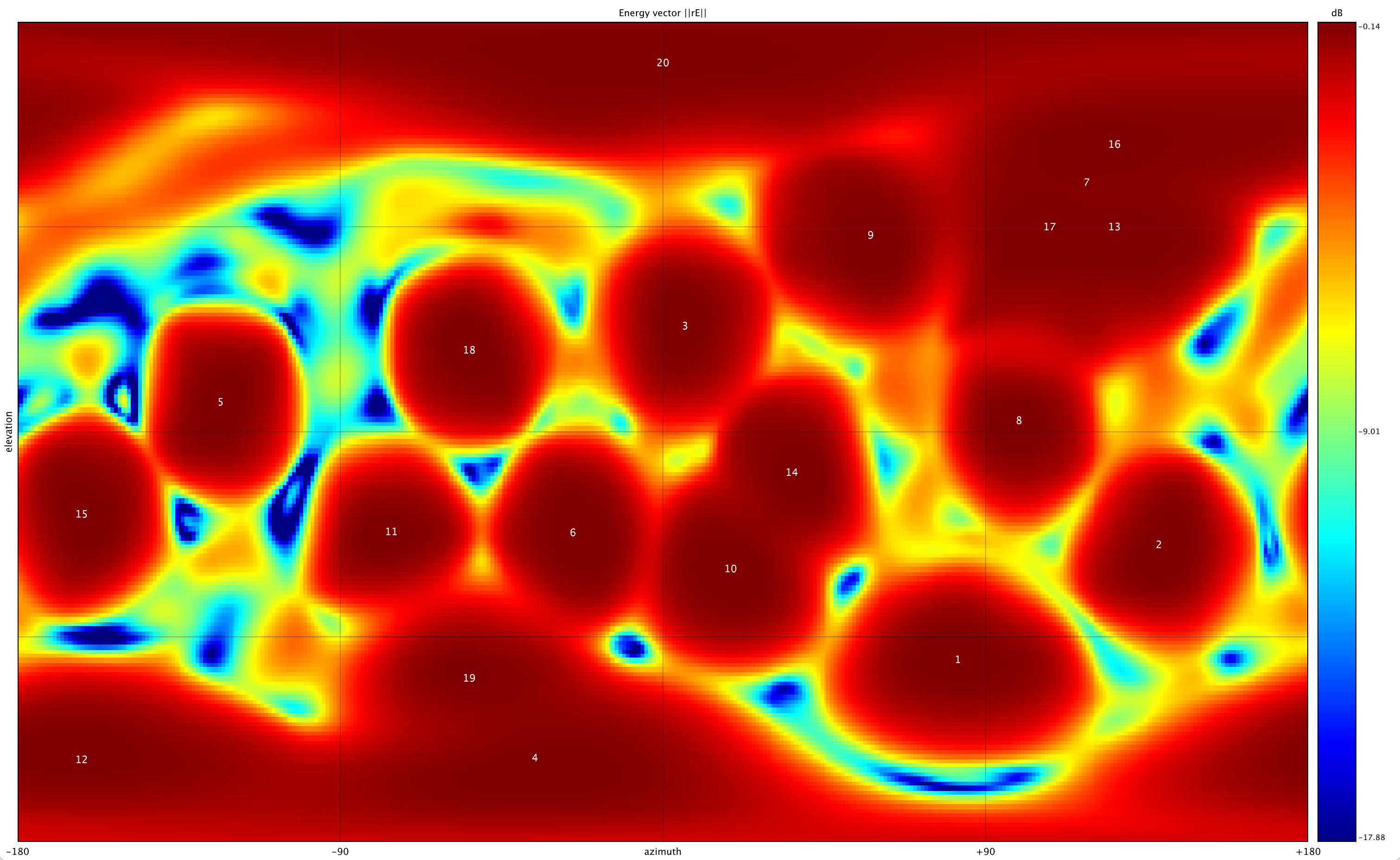

SFD relies on the polygon configurations that underpin HOA for virtual microphone/loudspeaker positions, whereby each vertex represents an individual audio channel in the 3d decoding/encoding scheme. With SFD, these vertices are repositioned in uniform ways to create rotations and transpositions, or in nonuniform ways to create spatial artifacts and discontinuities, offering new immersive qualities.
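
A minimal sketch of vertex displacement, under stated assumptions: first-order (W, X, Y, Z) frames with SN3D-style gains, a basic sampling decoder onto six octahedron vertices, and hypothetical helper names (`decode`, `encode`, `rotate_z`, `displace`). It is meant only to show the decode, move, re-encode pattern, not how SFFX implements SFD.

```python
import math

# Octahedron vertices: six virtual loudspeakers on the unit sphere.
OCTAHEDRON = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def decode(frame, vertices):
    """Sampling decoder: one feed per vertex from a first-order (W, X, Y, Z) frame."""
    w, x, y, z = frame
    n = len(vertices)
    return [(w + 3.0 * (vx * x + vy * y + vz * z)) / n for vx, vy, vz in vertices]

def encode(feeds, vertices):
    """Re-encode each feed at its (possibly displaced) vertex and sum the results."""
    w = sum(feeds)
    x = sum(g * v[0] for g, v in zip(feeds, vertices))
    y = sum(g * v[1] for g, v in zip(feeds, vertices))
    z = sum(g * v[2] for g, v in zip(feeds, vertices))
    return (w, x, y, z)

def rotate_z(vertices, degrees):
    """Uniform displacement: rotate every vertex about the vertical axis."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in vertices]

def displace(vertices, offsets):
    """Nonuniform displacement: shift each vertex by its own offset, then
    project it back onto the unit sphere (offsets must not cancel a vertex)."""
    moved = []
    for (x, y, z), (dx, dy, dz) in zip(vertices, offsets):
        px, py, pz = x + dx, y + dy, z + dz
        r = math.sqrt(px * px + py * py + pz * pz)
        moved.append((px / r, py / r, pz / r))
    return moved

source_front = (1.0, 1.0, 0.0, 0.0)  # unit signal encoded straight ahead (+x)
feeds = decode(source_front, OCTAHEDRON)
# Re-encoding at rotated vertices rotates the whole field: the source moves to +y.
rotated = encode(feeds, rotate_z(OCTAHEDRON, 90))
# Re-encoding at unevenly shifted vertices distorts the field instead.
warped = encode(feeds, displace(OCTAHEDRON, [(0.0, 0.0, 0.5)] * 6))
```

With unmoved vertices, `encode(decode(frame, OCTAHEDRON), OCTAHEDRON)` returns the original frame, so any change in the output comes from the displacement alone.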

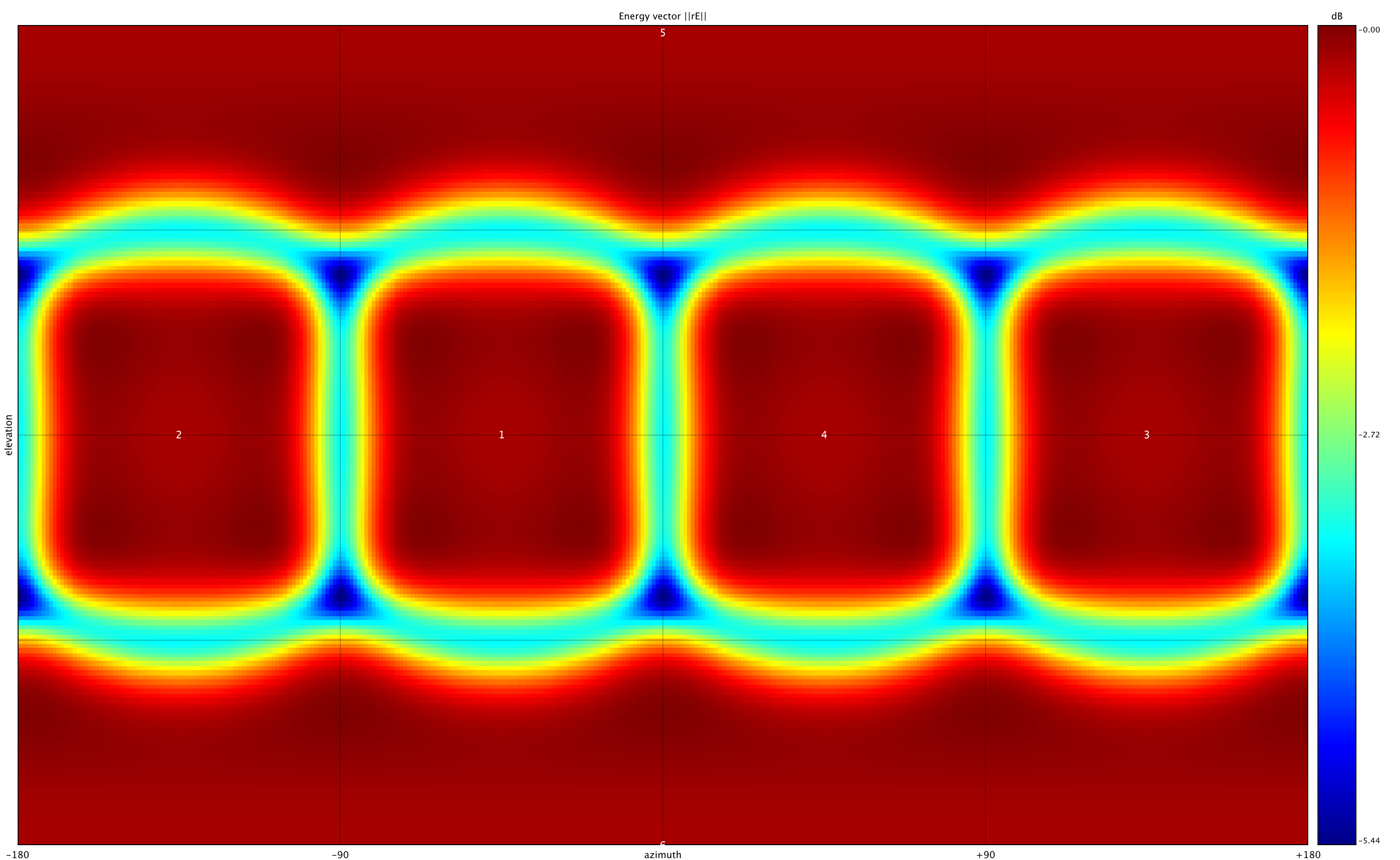

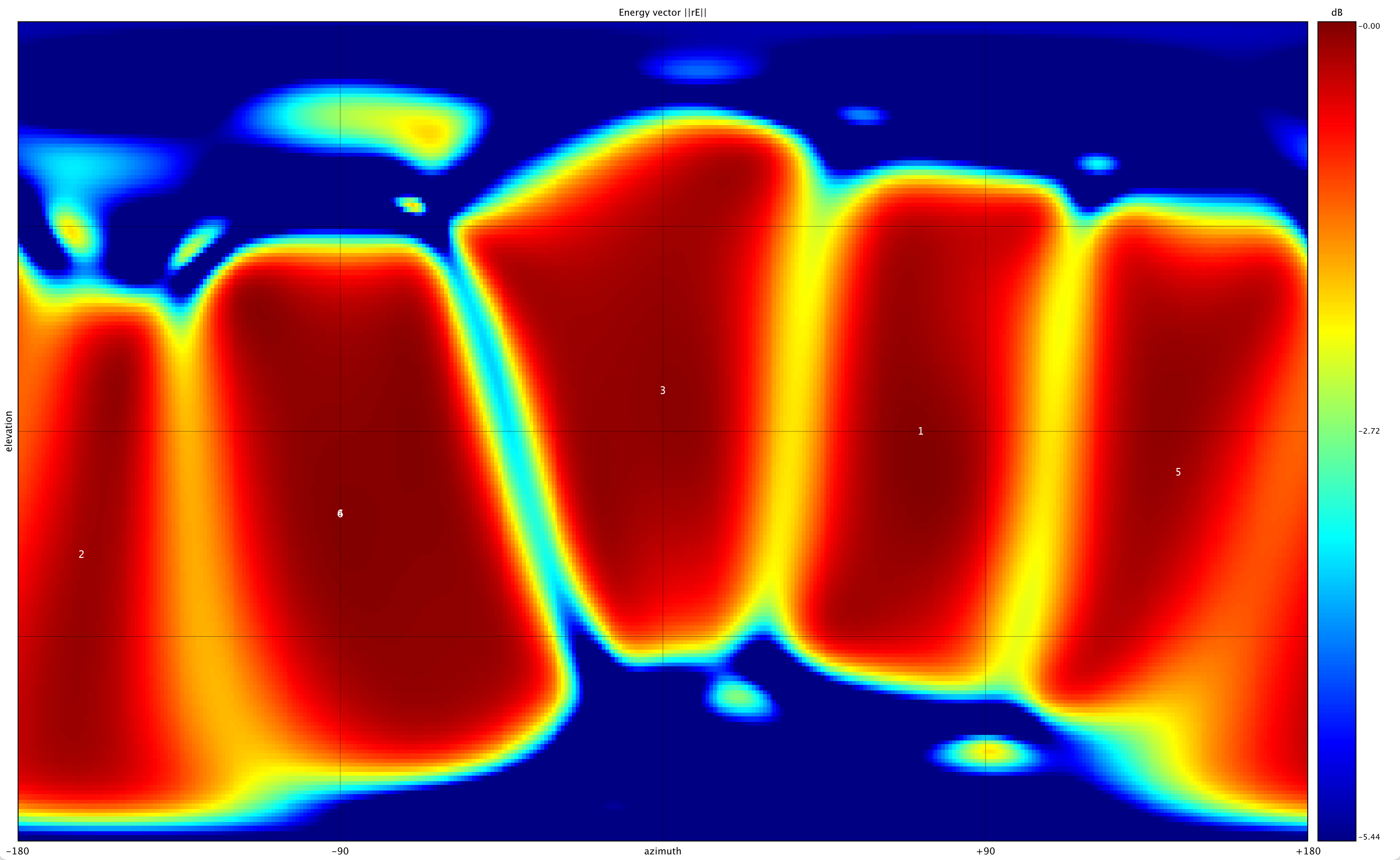

3d point, polygon, and energy vector representations of octahedral (6-channel) ambisonic coding

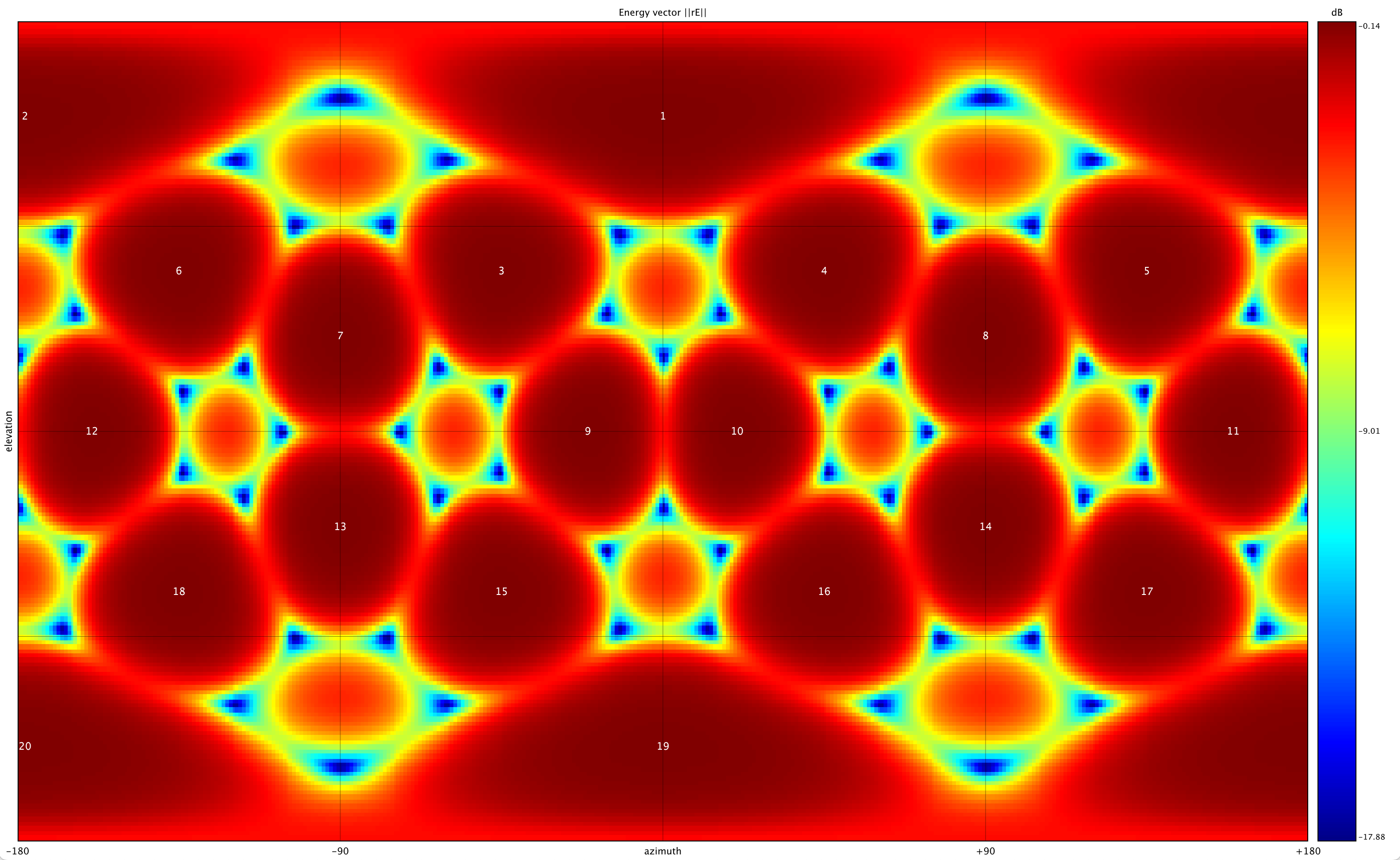

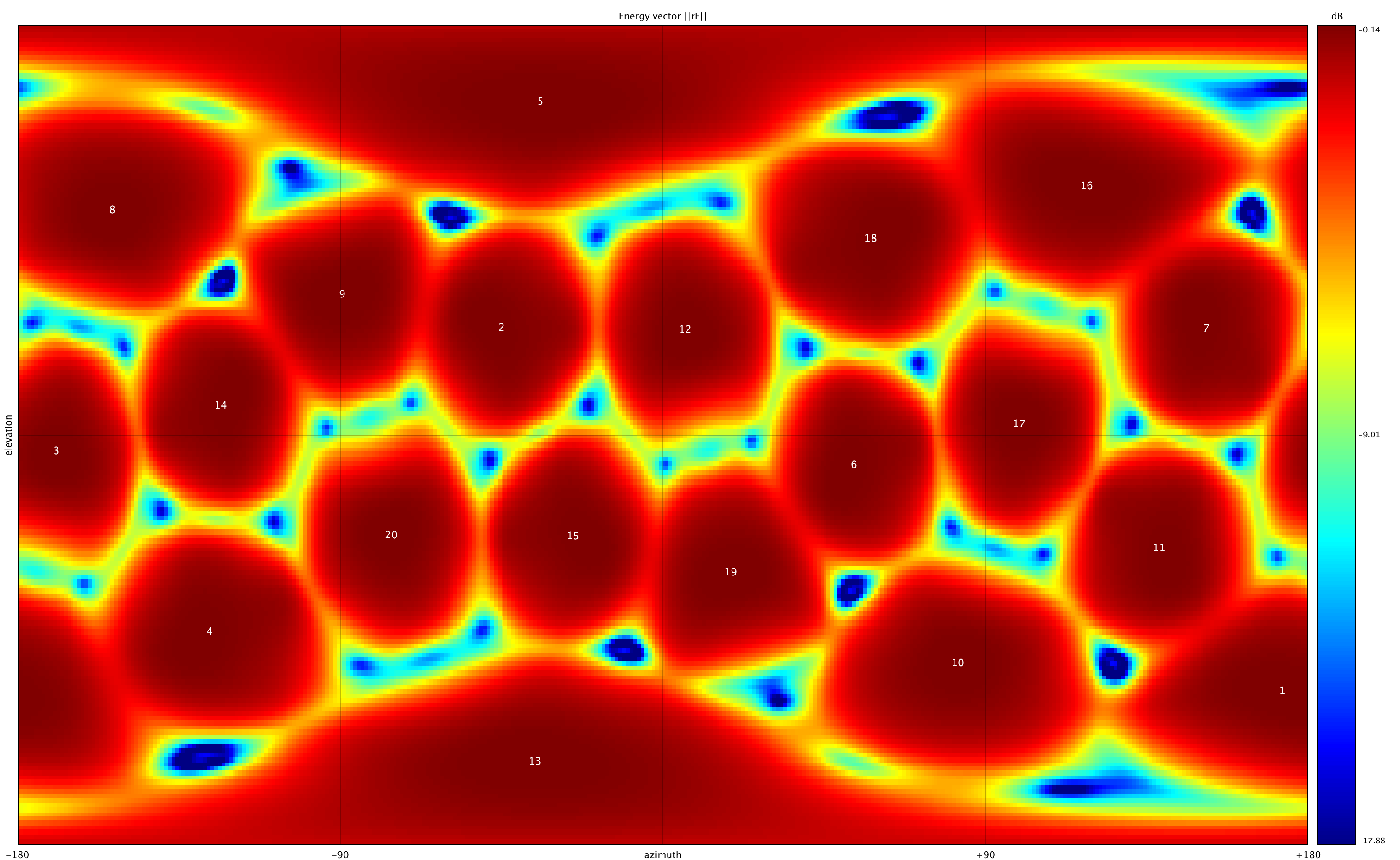

Energy vector visualizations of Sound Field Displacement, 6-channel

3d point, polygon, and energy vector representations of dodecahedral (20-channel) ambisonic coding

Energy vector visualizations of Sound Field Displacement, 20-channel

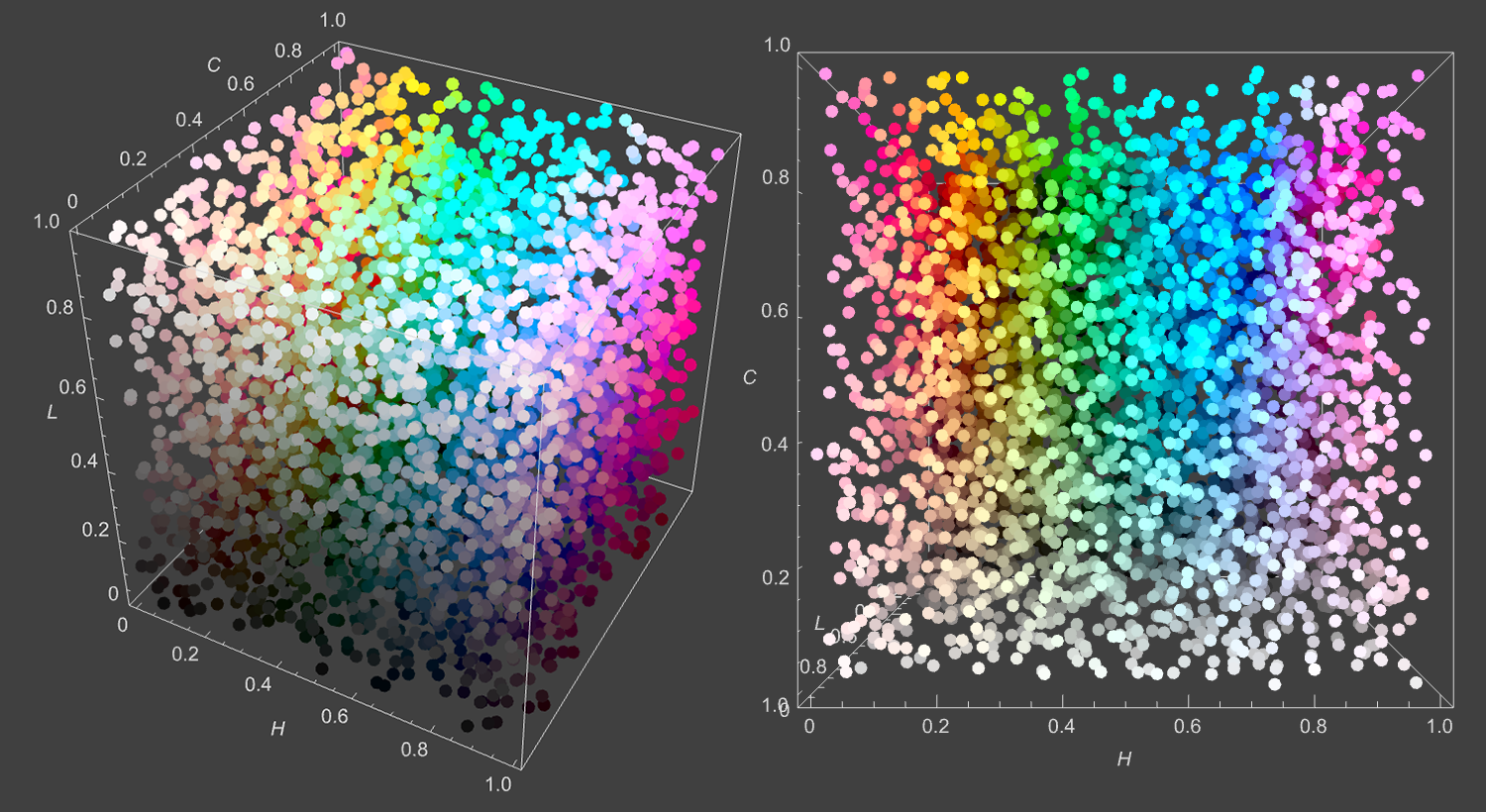

For SFD, I have created a link between color models and HOA by exploring their shared use of spherical and Cartesian coordinate systems. For instance, 3d color models such as Hue, Chroma, Luminance (HCL) can be mapped to XYZ spatial positions to place virtual microphones/loudspeakers for the HOA process. This repositioning of vertices based on color data gives composers a new means to graphically score or automate spatial parameters, providing an alternative to standard music notation systems, which are inadequate for this purpose, and to software compromises such as timeline-based breakpoint editors. Furthermore, real-time visualization based on spherical coordinates (azimuth and elevation) is another area where salient color models can be applied to support sound design and musical analysis.
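
One possible color-to-position mapping can be sketched as follows. The mapping itself is an assumption made for illustration (hue as horizontal angle, chroma as distance from the vertical axis, luminance as height), as are the function names and the normalization of chroma and luminance to the range 0 to 1; the mapping actually used for SFD may differ.

```python
import math

def hcl_to_position(hue_deg, chroma, luminance):
    """Map an HCL color to a Cartesian position (hypothetical mapping).

    Assumes hue in degrees and chroma/luminance normalized to 0..1.
    Hue sets the horizontal angle, chroma the distance from the vertical
    axis, and luminance the height from -1 (dark) to +1 (light).
    """
    h = math.radians(hue_deg)
    return (chroma * math.cos(h), chroma * math.sin(h), 2.0 * luminance - 1.0)

def to_spherical(x, y, z):
    """Convert a Cartesian position to (azimuth, elevation) in degrees plus radius."""
    radius = math.sqrt(x * x + y * y + z * z)
    if radius == 0.0:
        return (0.0, 0.0, 0.0)
    azimuth = math.degrees(math.atan2(y, x))
    # Clamp to guard against rounding pushing the ratio just outside [-1, 1].
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, z / radius))))
    return (azimuth, elevation, radius)

# A fully saturated color at hue 90 and mid luminance lands on the horizon at 90 degrees.
position = hcl_to_position(90.0, 1.0, 0.5)
azimuth, elevation, radius = to_spherical(*position)
```

Under this mapping, a sequence of colors (for example, pixels read along one row of an image) yields a sequence of azimuth/elevation pairs that could drive virtual microphone/loudspeaker positions over time.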