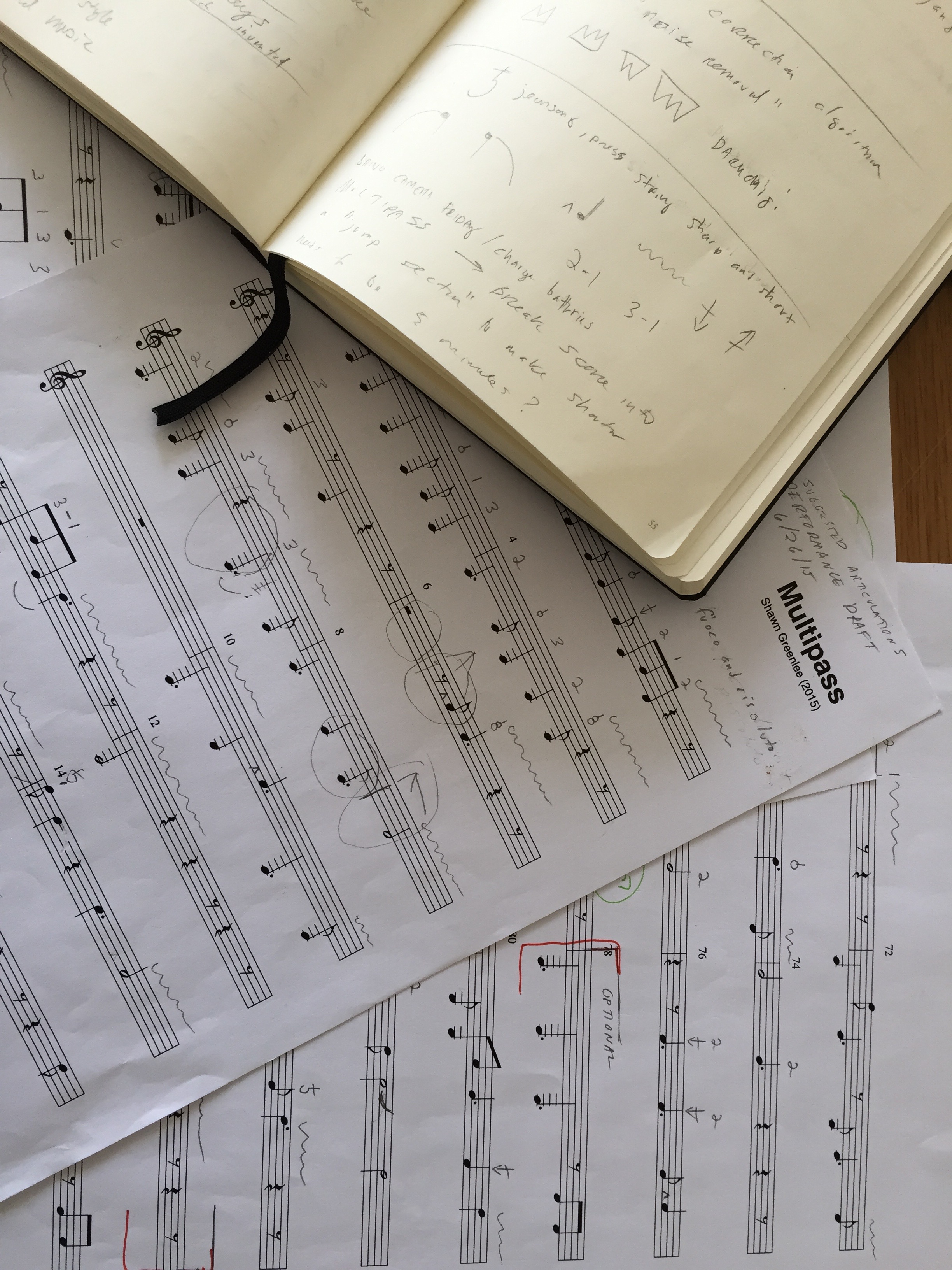

Multipass (2015)

Kyungso Park performing Multipass, Gyeonggi Creation Center in Daebudo

Multipass is a piece for solo gayageum. It is based on the algorithmically generated score made for an earlier work (Multipath). This adaptation was created during studies at the National Gugak Center in June 2015.

The title is a reference to digital file encoding where key perceivable aspects are retained, but some information is inevitably lost.

Kyungso Park performing Multipass, National Gugak Center in Seoul

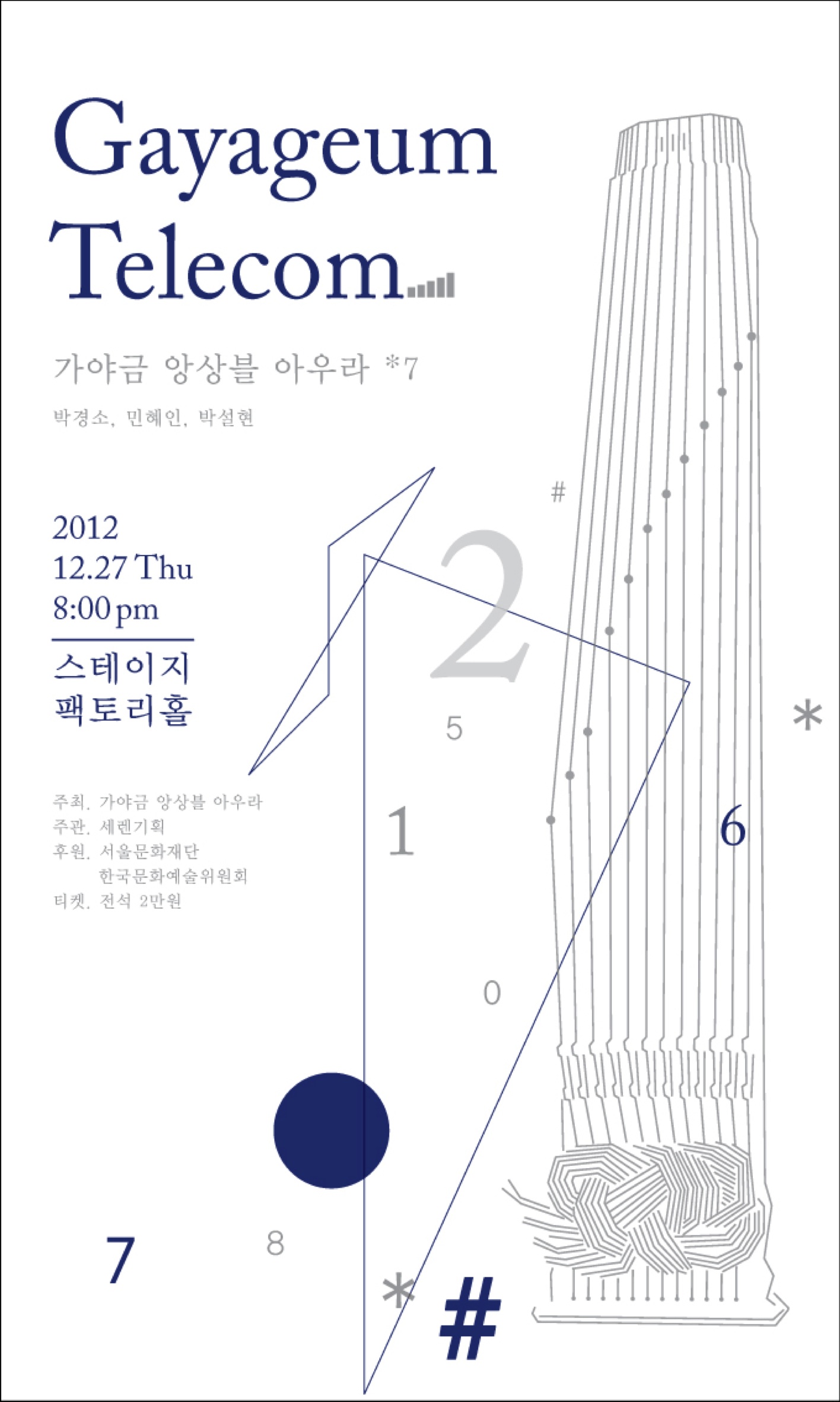

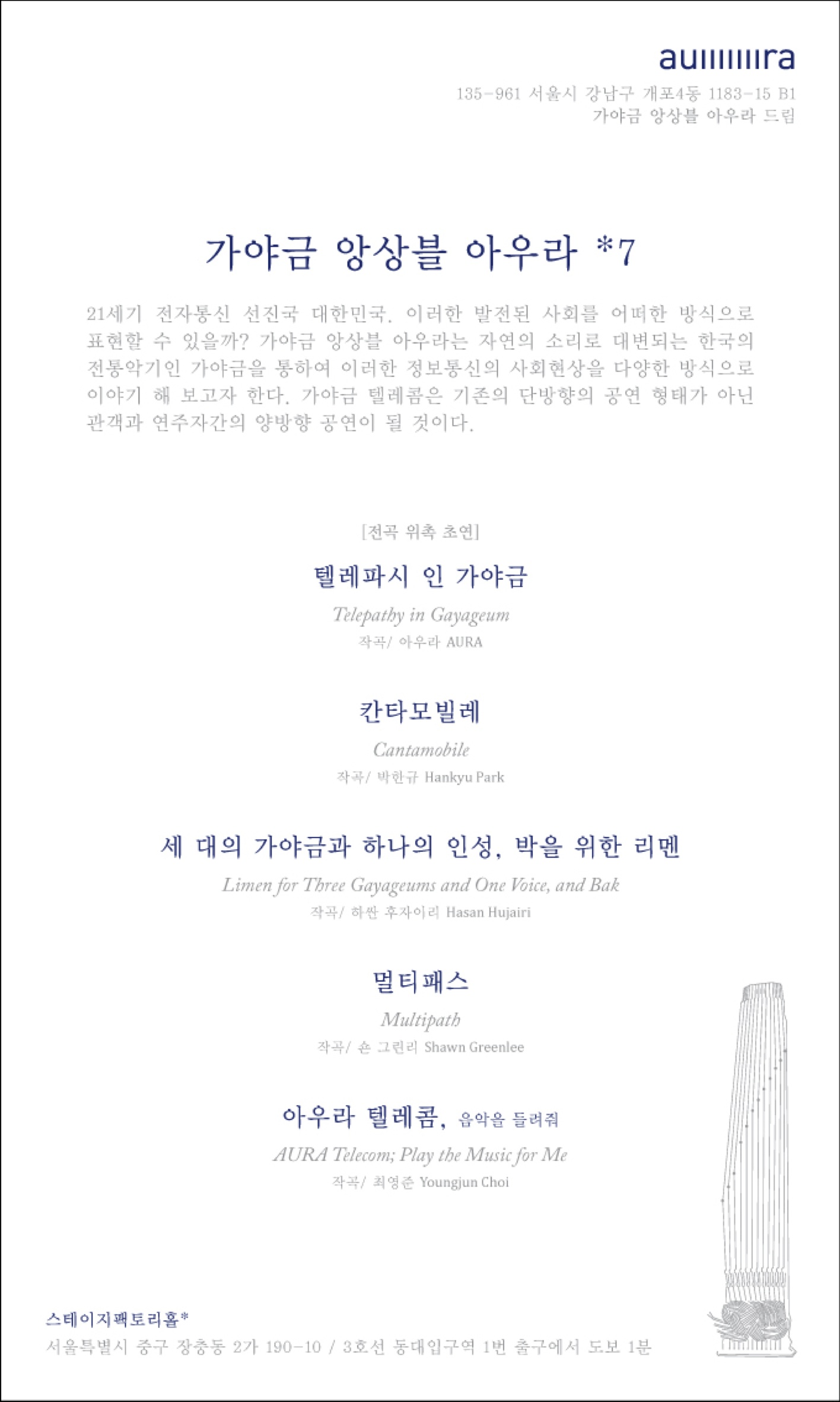

Multipath (2012)

My first work for the gayageum premiered on December 27, 2012 at the Welcomm Theatre in Seoul, South Korea. In the autumn of 2012, I was commissioned to create a new work on the theme of telecommunications for gayageum ensemble AURA (Kyungso Park, Hyein Min, and Seolhyun Park). I had two initial challenges. The first was to study the gayageum in order to learn its playing technique and history. The second was to find a way to interpret telecommunications principles as a compositional framework.

In learning about the history of gayageum notation, I found a starting point in the jeongganbo (jeongak) notation system invented by King Sejong in 1445, considered to be Asia’s first notation system to use varying note shapes and to indicate temporal durations. In this matrix form of notation, I found a conceptual link to the grids we encounter every day as the pixel arrays of digital screens on cell phones, mobile devices, computers, and televisions.

During the research for this work, I studied various aspects of wireless telecommunications. I have been particularly interested in phenomena that occur when transmitted signals do not arrive at the receiving end as intended, when new images and sounds occur that usually cause us to change our position, move antennae, or finely tune a dial so that we can receive better. One cause of such interference is multipath propagation of wireless signals. This is the origin of the piece’s title. In multipath propagation, a wirelessly transmitted signal reaches a receiver by more than one path, causing interference through near-simultaneous or time-delayed occurrences of the same signal. Typically this phenomenon is caused by reflections off bodies of water, landmasses, and buildings. In a visual transmission, we might call these effects “ghosting” or “jitter.”
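The delayed-copy idea can be illustrated with a very small sketch. This is only a toy model under stated assumptions, not a description of any real radio channel: a single reflection, and the names `multipath`, `delay`, and `gain` are hypothetical and chosen for illustration.

```python
def multipath(signal, delay, gain):
    """Sum a direct signal with one delayed, attenuated copy of itself.

    `delay` is the extra travel time of the reflected path in samples and
    `gain` is how much of the signal survives the reflection (both assumed).
    """
    received = []
    for n, direct in enumerate(signal):
        # The reflected copy only starts arriving after `delay` samples.
        reflected = gain * signal[n - delay] if n >= delay else 0.0
        received.append(direct + reflected)
    return received


# A single pulse arrives once directly and once more, weaker, three samples later.
pulse = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(multipath(pulse, delay=3, gain=0.5))  # [1.0, 0.0, 0.0, 0.5, 0.0, 0.0]
```

The second, weaker pulse is the "ghost" of the first.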

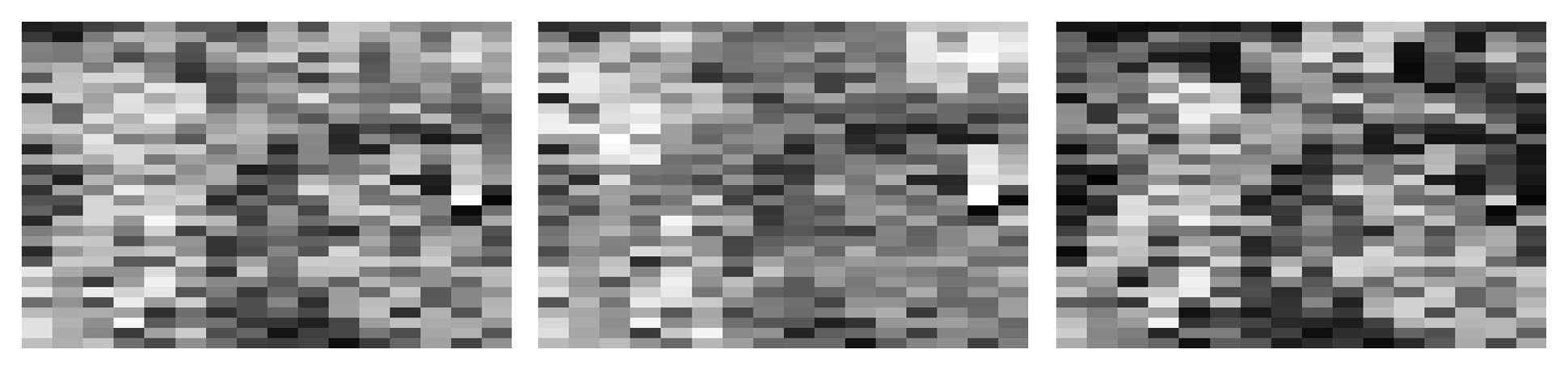

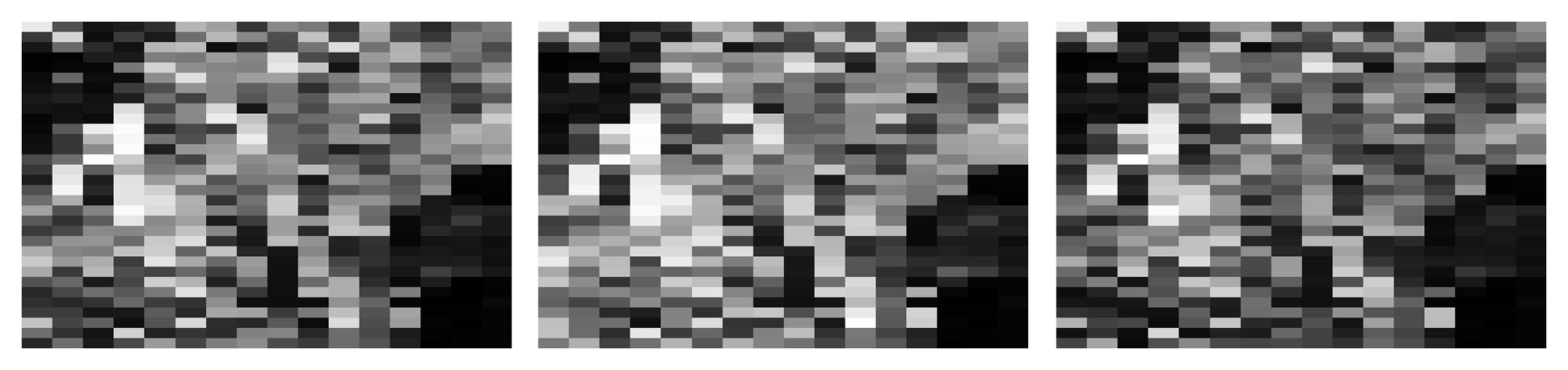

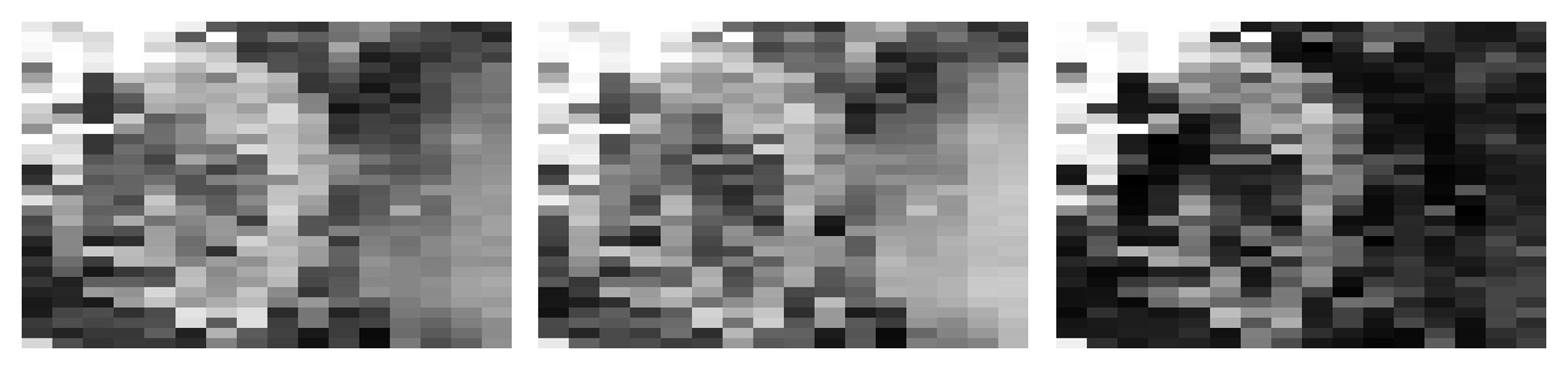

In order to make this concept audible, I composed a computer program that generates scores for individual performers via an idiosyncratic data sonification procedure. I developed a way to translate a visual image into musical passages, following a system where the image is reduced in resolution (pixelated or downsampled) into a grid of 16 x 32 pixels. Importantly, a full-color image is composed of red, green, and blue values, so I prepared the translation with each player in AURA performing a different color channel. In simple terms, brighter color values within each channel produce higher pitches and longer durations; darker color values produce lower pitches and shorter durations. The very darkest and very brightest values produce rests.

Source images, downsampled versions, and separated RGB channels
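As a rough sketch of the image preparation described above, the following reduces an image to a 16 x 32 grid and separates its color channels. It is not the program used for the piece: block averaging is an assumed downsampling method, the grid is assumed to be 16 pixels wide and 32 rows tall, and the function names and the test image are hypothetical.

```python
def downsample(image, out_w=16, out_h=32):
    """Block-average an image given as rows of (r, g, b) tuples.

    Assumes the source is at least out_w x out_h pixels.
    """
    in_h, in_w = len(image), len(image[0])
    grid = []
    for y in range(out_h):
        y0, y1 = y * in_h // out_h, (y + 1) * in_h // out_h
        row = []
        for x in range(out_w):
            x0, x1 = x * in_w // out_w, (x + 1) * in_w // out_w
            block = [image[j][i] for j in range(y0, y1) for i in range(x0, x1)]
            # Average each of the three color components over the block.
            row.append(tuple(sum(px[c] for px in block) // len(block)
                             for c in range(3)))
        grid.append(row)
    return grid


def split_channels(grid):
    """Return three grids (red, green, blue), one per player."""
    return [[[px[c] for px in row] for row in grid] for c in range(3)]


# Hypothetical 64 x 128 test image: a horizontal red ramp, constant green, no blue.
source = [[(x * 4, 100, 0) for x in range(64)] for _ in range(128)]
red, green, blue = split_channels(downsample(source))
print(len(red), len(red[0]))   # rows, pixels per row
print(red[0][:4], green[0][:4], blue[0][:4])
```

Each of the three resulting grids would then serve as the raw material for one performer's part.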

Of the 16 pixels in each row, 8 describe the pitches to be played, and the 8 adjacent pixels describe the duration of each of those notes. After the 8 notes are complete, the next row is played, and so on until the end of the image is reached. Because each player is performing a different color channel, the pitches and durations for each performer vary. Each player moves through rows at a different pace, rendering the sound.
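The row-by-row reading can be sketched as follows. This is a minimal illustration under several assumptions that are not taken from the original program: the first eight values of a row are read as pitches and the last eight as their durations, the rest thresholds (16 and 239), the 12-step pitch range, and the 4-beat maximum are all hypothetical, and rests are triggered by the pitch pixel only.

```python
REST_LOW, REST_HIGH = 16, 239   # hypothetical: values outside this range are rests
PITCH_STEPS = 12                # hypothetical number of available pitches
MAX_BEATS = 4                   # hypothetical longest duration, in beats


def scale(value, steps):
    """Map a brightness linearly onto 1..steps (brighter gives a larger result)."""
    value = min(max(value, REST_LOW), REST_HIGH)
    return 1 + (value - REST_LOW) * steps // (REST_HIGH - REST_LOW + 1)


def decode_row(row):
    """Turn one 16-value row into eight (pitch, beats) notes; pitch None is a rest."""
    notes = []
    for pitch_px, dur_px in zip(row[:8], row[8:16]):
        is_rest = pitch_px < REST_LOW or pitch_px > REST_HIGH
        pitch = None if is_rest else scale(pitch_px, PITCH_STEPS)
        notes.append((pitch, scale(dur_px, MAX_BEATS)))
    return notes


def row_beats(notes):
    """Total length of a row in beats."""
    return sum(beats for _, beats in notes)


# Same pitch pixels in two channels, but brighter duration pixels in the second.
red_row = [0, 16, 128, 239, 255, 16, 16, 16] + [128] * 8
green_row = [0, 16, 128, 239, 255, 16, 16, 16] + [239] * 8
print(decode_row(red_row))
print(row_beats(decode_row(red_row)), row_beats(decode_row(green_row)))  # 24 32
```

Because the two rows last 24 and 32 beats, the two players would reach the next row at different moments, which is one way to picture the drift between performers.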

The effect is that coordinated musical phrases are experienced as somehow time-delayed, a bit off. There is a shadowing, ghosting, or echoing process happening between the players (arising from the offset between color channels). The listener hears the image slowly, row by row, with the induced error apparent as a feeling of asynchronicity between players.
